-

Primary Widget Area

-

This theme has been designed to be used with sidebars. This message will no

longer be displayed after you add at least one widget to the Primary Widget Area

using the Appearance->Widgets control panel.

- Log in

Flight Planning and GSD Calculator (Beta)

Posted in Setup Operation, Setup Operations, Setup Operations, Setup Operations

Tagged Canon 5D Mark II, Photogrammetry, Photoscan, Photoscan Pro

Nikon D200 IR Calibration Values

Below are camera calibration values for the CAST Nikon D200 IR camera with Nikkor 28 mm lens. For projects requiring highest accuracy it is recommended that you perform your own calibration, otherwise these values can be used.

PhotoModeler v2012 Calibration Values (August 2013, F-stop of f/8, overall RMS 0.218 pixels):

– Focal Length: 29.630373 mm

– Xp: 11.836279 mm

– Yp: 8.051145 mm

– Fw: 23.999451 mm

– Fh: 16.066116 mm

– K1: 1.435e-004

– K2: -1.481e-007

– P1: 5.659e-006

– P2: -1.311e-005

Calibration Values for PhotoScan (converted from PhotoModeler values using Agisoft Lens):

– fx: 4.7804273068544844e+003

– fy: 4.7803319847309840e+003

– cx: 1.9096258642704192e+003

– cy: 1.2989255852147951e+003

– skew: -8.0217439143733470e-004

– k1: -1.2545715047710407e-001

– k2: 1.5266014429827224e-001

– k3: -7.6547741407995973e-002

– p1: 3.5948192692070413e-004

– p2: -1.5418228415832919e-004

Posted in Uncategorized

Tagged Camera Calibration, Nikon, Nikon D200 IR

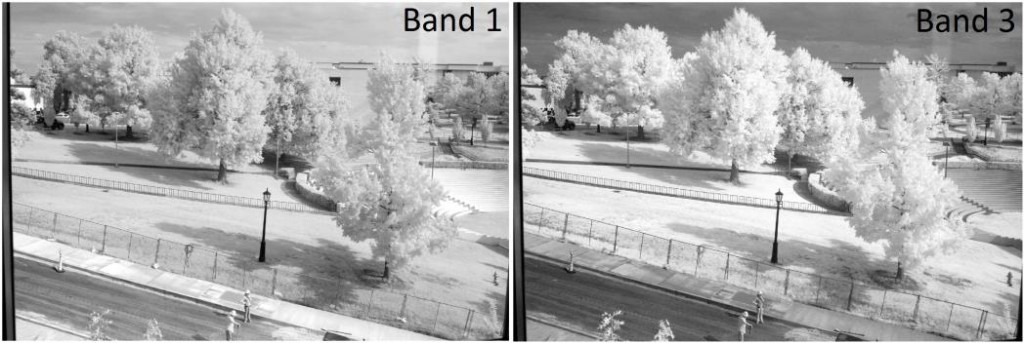

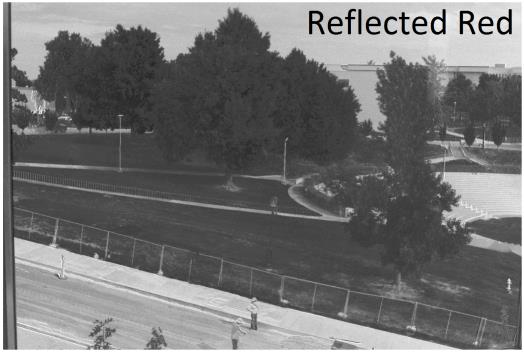

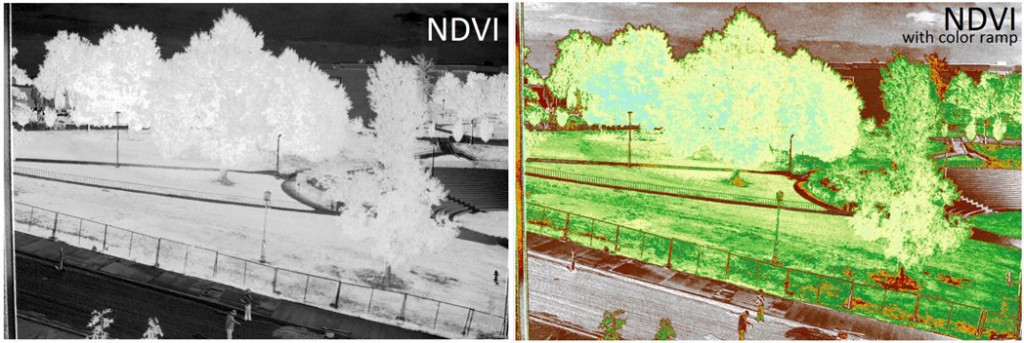

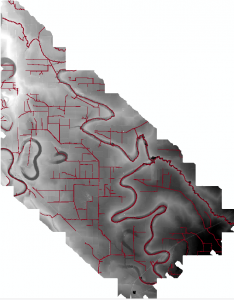

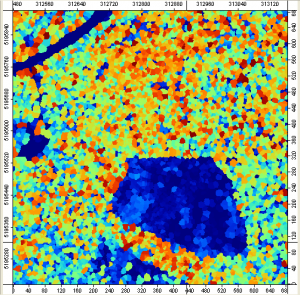

Pseudo-NDVI from Nikon D200 IR Images

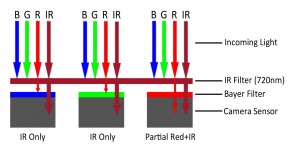

Because of the light transmission properties of the filter installed in the CAST Nikon D200 IR DSLR camera, it is possible to isolate infrared and red light into separate channels. These channels can then be used to generate an NDVI image using the well known formula:

NDVI = (NIR – Red) / (NIR + Red)

We’re calling this pseudo-NDVI for a couple reasons; 1) the IR filter installed only captures a portion of the visible red (probably less than half), so this bit of information is relatively weak, and 2) our method of isolating the “reflected red” (Band1 – Band3) is overly simplistic. However, we have tried more complicated methods (e.g. principal components, also averaging bands 2 and 3) but results did not improve. Without more work, the results of this process should not be compared to calibrated NDVI images.

1. Load (individually) bands 1 and 3. Band 1 represents Red+IR, Band 3 is IR only.

2. Run the Raster Calculator tool to produce a “reflected red” raster using the following raster math:

Band1 – Band3

3. Run the Raster Calculator tool to produce NDVI raster using:

(Float(“Band3”) – Float(“ReflectedRed”)) / (Float(“Band3”) + Float(“Reflected Red”))

Note: If you don’t use the Float functions in step 3 you end up with integers (-1,0,1), which are useless.

Posted in Setup Operations

Tagged NDVI, Nikon D200, Nikon D200 IR

Specific Settings for Nikon D200 and Close-Range Photogrammetry

Along with the generic advise given in the Acquire Images for Close-Range Photogrammetry and Custom White Balance for Nikon D200 IR posts, here are some important settings to consider when using the standard or IR modified Nikon D200 cameras:

– Rotate Tall: Set “Rotate Tall” to Off if the images are to be used for photogrammetry or GIS applications

– Image Quality: Use either the “NEF (RAW)” or “NEF (RAW)+JPEG” image quality setting. Capturing RAW images will preserve all image information and give you much more control over editing later

– Image Size: Set to “Large 3872×2592/10.0M”

– Optimize Image: Use these “Custom” settings and adjust as needed:

–Image Sharpening = None

–Tone Compensation = Normal (0)

–Color Mode = III

–Saturation = Normal (0)

–Hue Adjustment = 0

–Make sure you select “Done” after making adjustments to theses settings or they will be lost.

– Color Space: Should be set to “sRGB”

– JPEG Compression: For highest quality set to “Optimal Quality”

– RAW Compression: If memory card space is not an issue, set to “NEF (RAW)” for no compression. Otherwise turning on compression will cut the size of RAW images from 16MB to 9MB

– Intvl Timer Shooting: This setting can be used to take a predetermined number of images with a certain amount of time between each shot. To use this setting, three setting must be set:

1. Start: Two options exist including “Now” (starts taking images right away) or “Start Time” (allows you to set a time (e.g. 13:00, aka 1pm). If using Start Time, make sure the cameras time setting is correct

2. Interval: Sets the amount of time between each interval using hours, minutes, and/or seconds (one second minimum)

3. Select Intvl*Shots: This setting ask you to set 1. the total number of intervals and 2. number of images at each interval. If mounting the camera to the octocopter for example, one could set the Interval to 10 seconds, the total number of intervals to 50, and the number of images at each interval to two. This would result in 100 total images, with two at each of the 50 positions (interval)

Posted in Setup Operations, Setup Operations

Tagged close range scanning, Nikon, Nikon D200, Photogrammetry

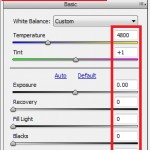

Custom White Balance for Nikon D200 IR

Because some red light is transmitted by the IR filter, any of the standard white balance settings will produce reddish images. A custom white balance using the “White Balance Preset” option can be used for better images. It’s important to note that the white balance setting is only applied to JPEG images saved on the memory card – RAW images are saved to the memory card exactly as they are recorded by the image sensor, without any processing. However, even when capturing RAW images, the image preview on the camera’s LCD will use the white balance setting, and will therefore be easier to evaluate exposure in the field.

To use the White Balance Preset option, follow these instructions to capture an appropriate custom WB image:

1. Hold down the “WB” button and rotate the rear wheel to select the “PRE” setting, release WB

2. Hold down the “WB” button until “PRE” begins to flash

3. Use the shutter release button to capture a photograph of healthy green grass in similar lighting as your intended subject (e.g. direct sun)

4. The camera should indicate “Good” or “No Gd” – if “No Gd” try again

5. If the camera indicates Good, take a test image to visually check your white balance

Posted in Nikon D200 IR, Setup Operations

Konica-Minota Vivid 9i – Check List

Note: The Konica Minolta VIVID 9i is best suited for indoor use. It will not work in direct or indirect sunlight. Any planned outdoor scanning should be done at night or under a blackout tent. The Minolta is also meant to be plugged in, so a generator or alternate power source is required when working outdoors.

___ VIVID 9i Scanner (Large orange hard case)

___ Turntable – optional (Medium black hard case)

___ Manfrotto tripod

___ Laptop with Polyworks installed (with REQUIRED Plugins Add-on). If you are going to be off the network, then you must borrow a license from the Polyworks license server to be able to use the software. Note: The laptop must be 32 bit with Windows XP with a PCMCIA card slot and serial connection. The Panasonic Toughbook is most commonly used with VIVID 9i and has the required configuration.

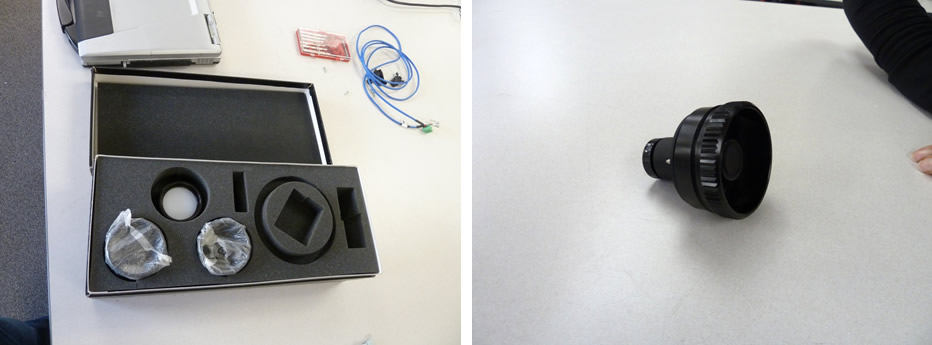

___ Black lens box

___ Small black case with cables and accessories

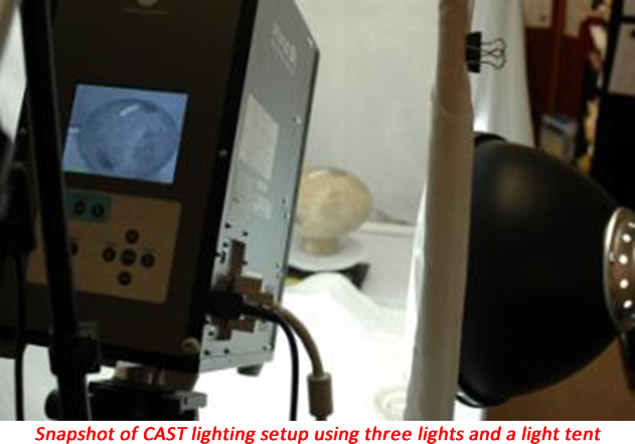

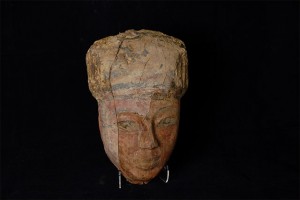

___ Additional lighting – optional (not shown above) Note: In addition to capturing surface information and detail of an object, the VIVID 9i also captures color (RGD) data. If the color properties of an object are important to your project, then we advise to use additional lighting to ensure more accurate color capture. CAST has a three light setup that uses white flicker-free flourescents that is available for checkout. If color is important – use good lighting (it can make all the difference).

Posted in Uncategorized

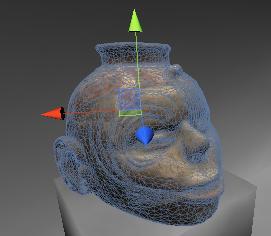

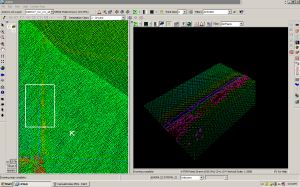

Konica-Minota Vivid 9i – Scanner Settings & Data Collection

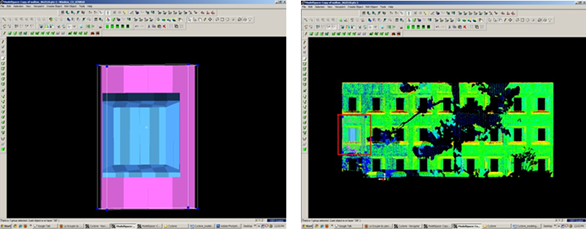

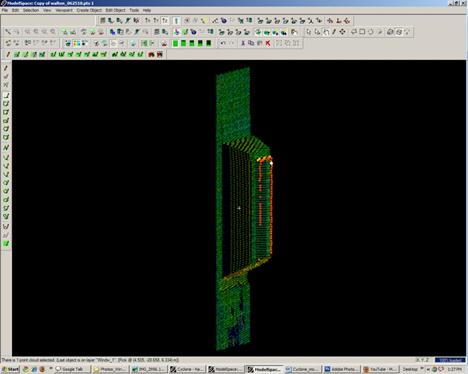

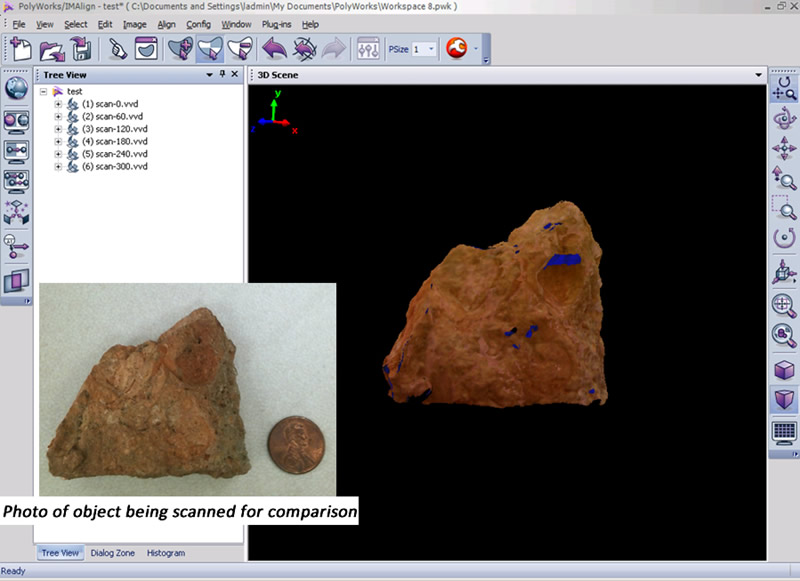

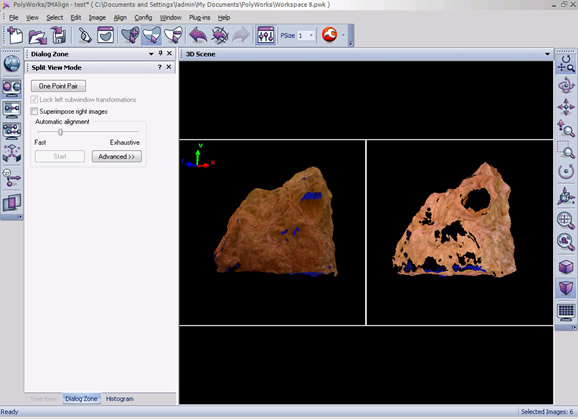

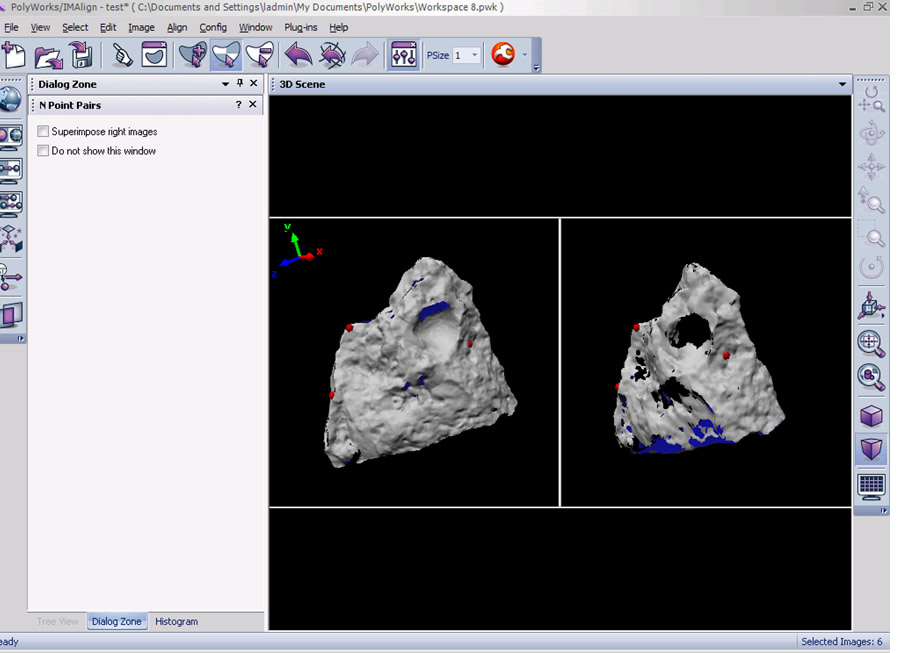

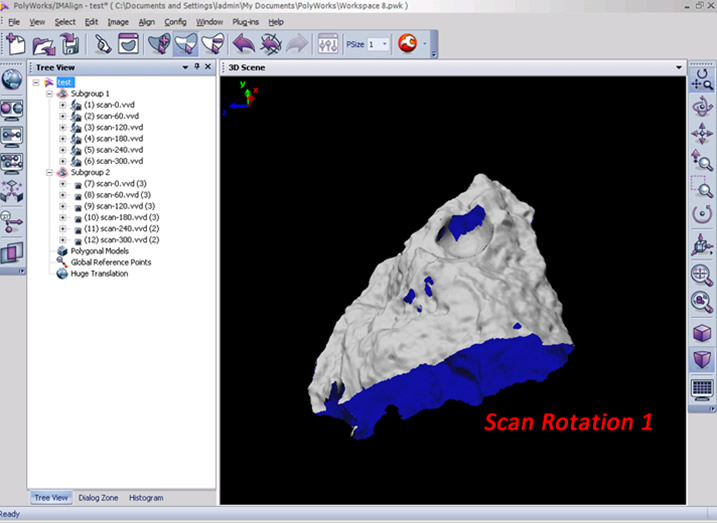

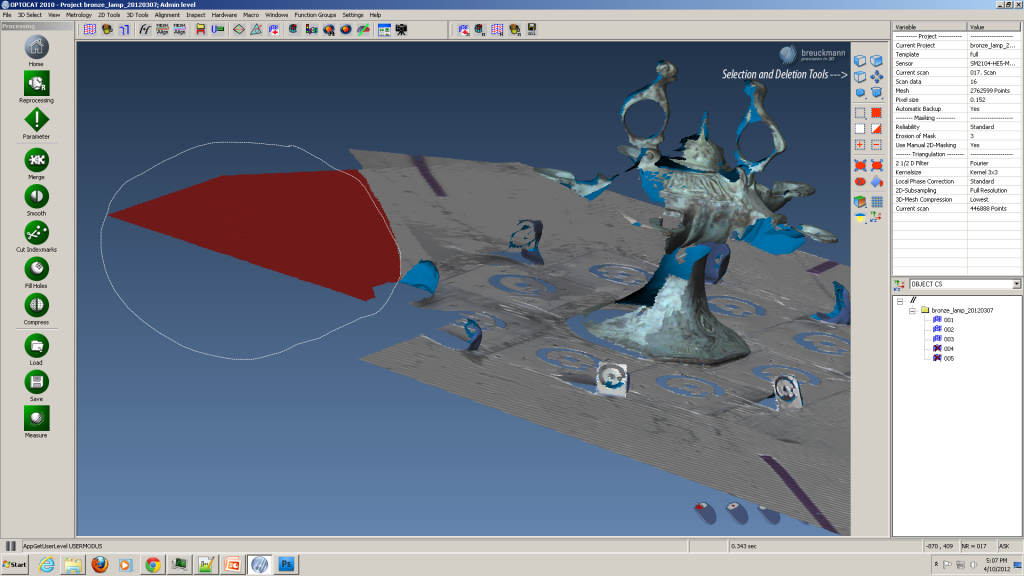

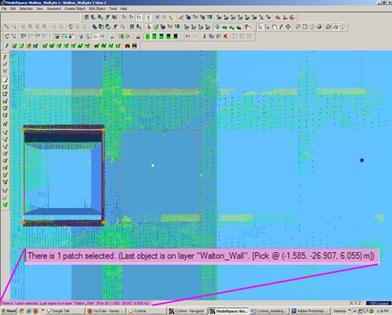

This document guides you through scanner settings and collection procedures using the Konica-Minolta Vivid 9i, a turntable and Polyworks IMAlign software.

Hint: You can click on any image to see a larger version.

[wptabs style=”wpui-alma” mode=”vertical”] [wptabtitle] CALIBRATION [/wptabtitle]

[wptabcontent]

1. White Balance Calibration: Before you begin scanning, you need to perform the white balance calibration on the scanner. Make sure the lighting conditions in your environment are set to what they will be during the scanning process.

A. Next, remove the white balance lens from the lens box and screw it onto the top front of the scanner.

B. Press the Menu button the back of the scanner and select White Balance and press Enter. Under the White Balance menu, choose Calibration and press Enter. Do not touch or pass in front of the scanner while it is performing the calibration.

C. When the calibration is complete, remove the white balance lens and put it back in the lens box. You are now ready to begin your scanning project.

[/wptabcontent]

[wptabtitle] TITLE OF SLIDE [/wptabtitle] [wptabcontent]

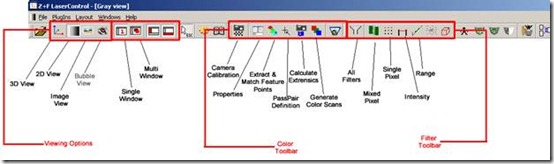

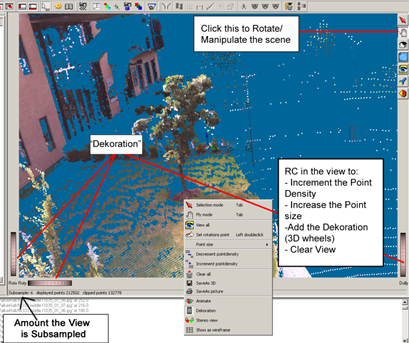

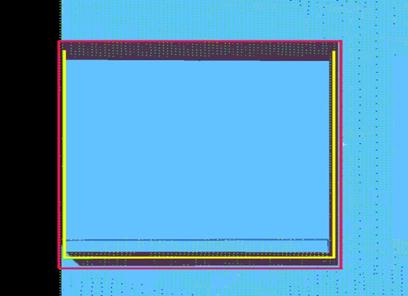

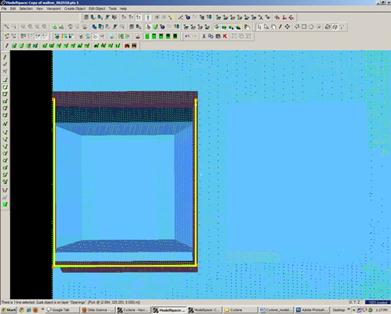

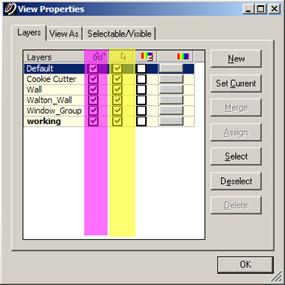

2. Open Polyworks IMAlign (v 10.1 in this example) and select the Plugins menu – Minolta – VIVID 9i – Step Scan. The One Scan option is used if you are not using the turntable. If you do not see the Plugins menu, you will need to install the Plugins Add On from the Polyworks installation directory.

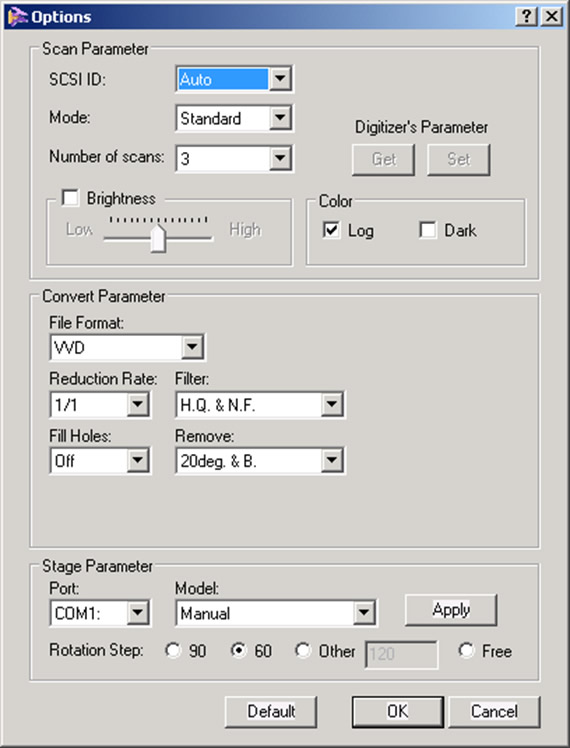

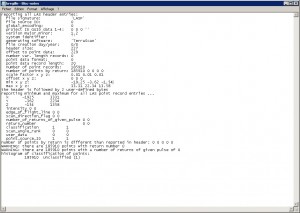

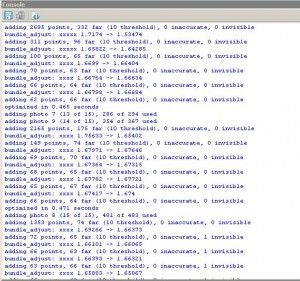

3. When the VIVID 9i window opens, you should see a live view from the scanner camera. Select the Options button on the right. Under Scan Parameters, select Standard Mode. Extended mode offers a greater scan range however it limits noise filtering operations. Standard mode should work for most projects. The number of scans are the number of passes the scanner makes per scan position. From the manual: “It averages the data from each pass to produce a single scan file therefore more scans theoretically will give you the most accurate data. We recommend 3 or 4 scans, however if time constraints are an issue then this number may be lowered.

4. Under Convert Parameter, check the VVD File Format and a Reduction Rate of 1/1 (No data reduction). Under filter, select H.Q. & N.F. (High quality and noise filter). This is the highest filter setting and is most effective for minimizing scanner noise. Also, make sure Fill Holes is Off and under Remove, it is generally advised to select 20deg & B. (20 degrees and Boundary). This last parameter removes data around the perimeter of a scan that tends to be less reliable. In previous experiences, it was noted the data around the scan edge often curves up creating an artificial lip in the data. Researchers found that increasing the Remove parameter to the highest setting, removed the “scan edge lip.” All of the settings above are just suggestions and may need to be altered to suit individual project needs.

5. Under Stage Parameter – Model, select Parker 6105 (Fast); this selects the correct turn table model. Finally select the desired Rotation Step. A Rotation step of 60 is suggested which will result in collecting 6 scans per object rotation.

6. Hit Apply and then OK.

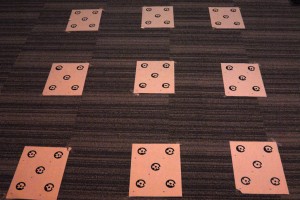

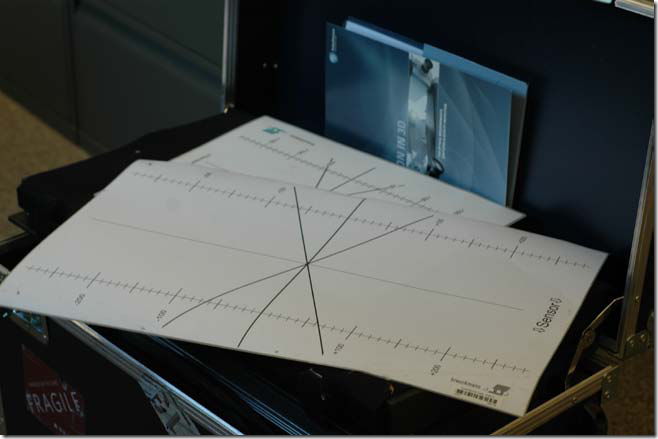

7. Back in the VIVID 9i Window, select the Stage Apply button to apply the stage parameters. Next, we will calibrate the turntable by using the black and white calibration charts found in the turntable case.

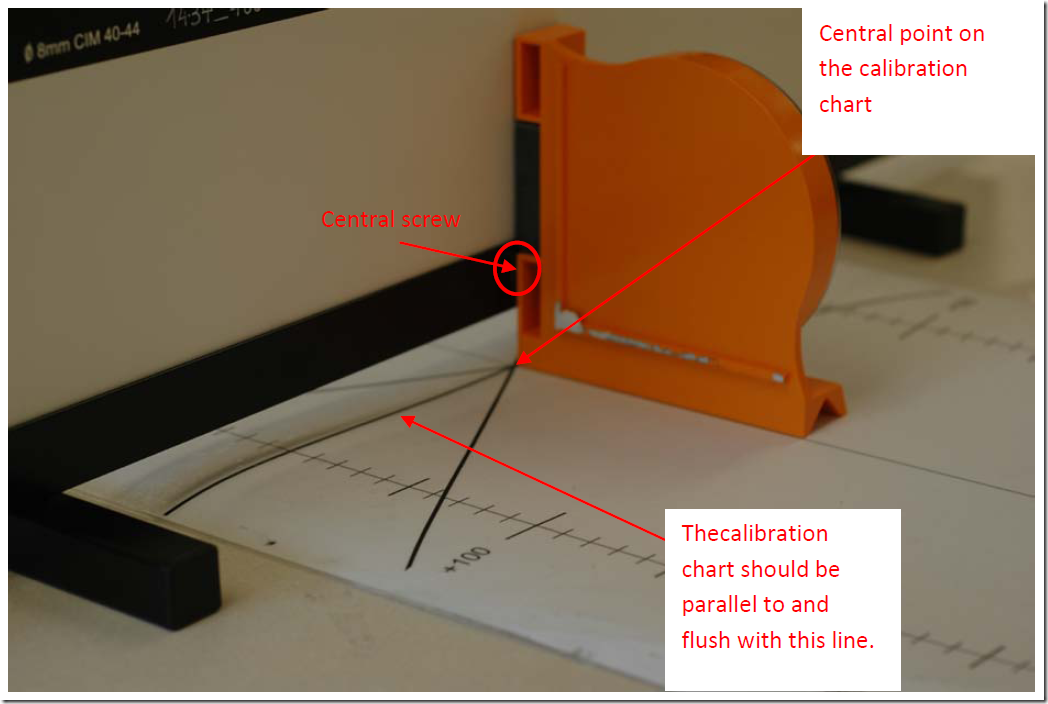

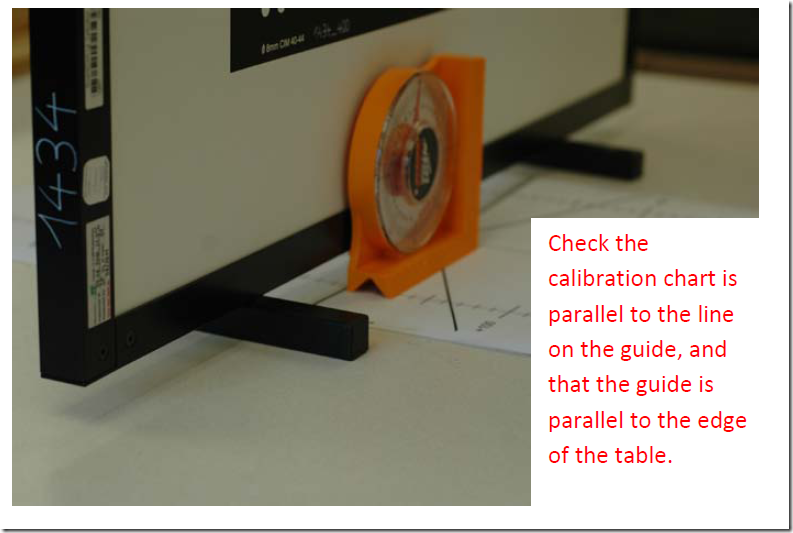

8. The smaller chart is used with the tele lense and the larger chart is used with both the mid and wide angle lenses. Place the appropriate calibration chart on the system turntable facing the scanner. The smaller chart has two pegs that fit associated holes on the turntable. The larger chart also has two smaller pegs that rest in a recessed ring on the turntable. In either case, it is important for the center of the chart to rest in the center of the turntable and for each chart to be completely upright. The black line that runs down the center of each chart is used to identify the center axis of the turntable which is then used to coarsely align or register the scans from a single scan rotation. In this example, the tele lense and small chart will be used.

9. You should now see the scanner chart in the live view window. It is recommended to fill the view with the scan chart as best as possible. You may need to adjust the scanner position and rotation to achieve the correct view. NOTE: You will not be able to move the scanner after you perform the turntable calibration. If you do move the scanner after this step, then the turntable will need to be re-calibrated.

10. Next, hit the Chart Scan button. The scanner will then scan the chart and indicate whether or not the calibration was successful. It is not necessary to store the chart scan.

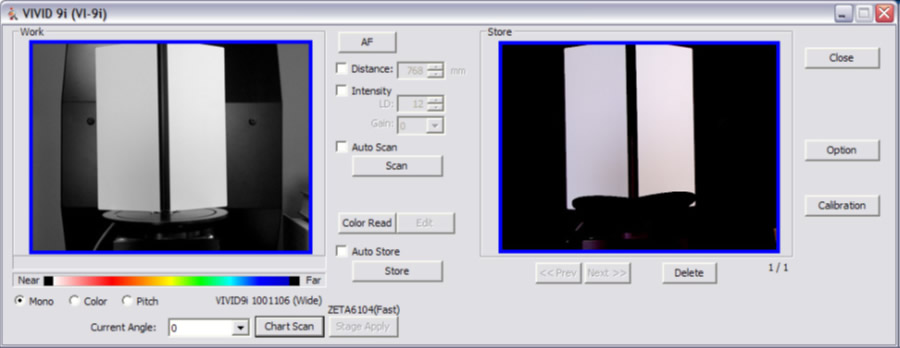

11. You are now ready to begin scanning. Place the desired object on the center of the turntable. It is advised to perform a single scan to test system perameters before completing a scan rotation. First, select the AF (Auto Focus button). This auto focuses the scanner camera and determines the object’s distance from the scanner, displaying the value in the Distance box. The Distance value can be altered to acquire object data at a specified distance. Next, press the Scan button. Once completed the scan should appear in the Store window on the right. Check the scan for any anomalies such as holes, poor color, etc. If you wish to keep the scan, you MUST click the Store button. If you do not click the Store button, the scan will be overwritten. If you are happy with the scan results, then you are ready to proceed with a scan rotation.

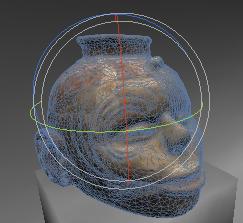

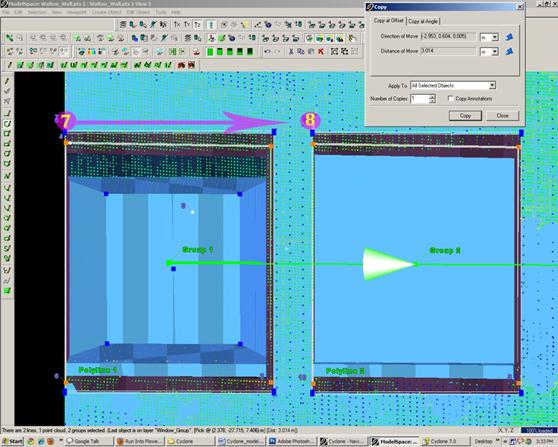

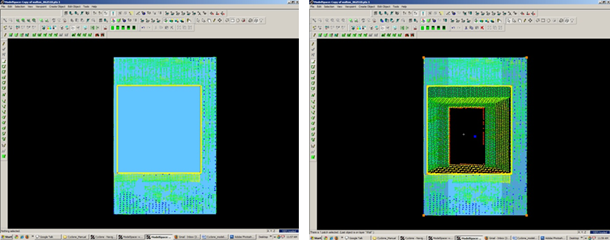

12. To perform a scan rotation, first select the Auto Scan and Auto Store options. Next, press the Scan button. The scanner will perform 6 scans rotating 60 degrees between each scan and will automatically store the results in the IMAlign project. Once the scans are complete, click in the IMAlign project window to view the results.

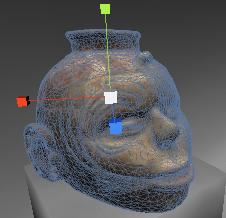

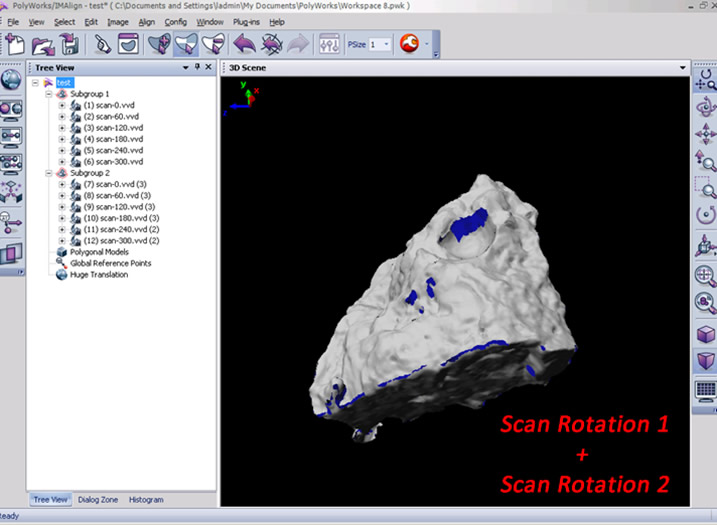

13. The six scans are displayed in the Tree view (table of contents) on the left with the name “scan” followed by a suffix indicating the scan angle (0, 60, 120, etc…) and represent one complete scan rotation. These scans have been roughly aligned to one another based on the central axis of the system’s turntable. We recommend to clean and align scans as you go as it takes little additional time and it ensures that all of the required data has been captured (and all holes/voids have been filled in).

14. Using the middle mouse button, select and delete any data that are not associated with your scan object. Next, make sure all of your scans are unlocked and run a Best Fit alignment. The Best Fit operation is an iterative alignment that produces a more accurate alignment between the scans.

15. Now that the scan rotation has been cleaned and better aligned, prepare for the next scan rotation by first locking all of the scans of the first rotation (select all in the Tree View, right click and select Edit – Lock or use Ctrl+Shft+L) and then by grouping the scans together (select all in the Tree View, right click and select Group). Now observe the data that you have collected, note holes or voids in the digital object, and identify what areas need to be scanned next. If you are scanning a large object, perhaps you will need to perform another scan rotation to acquire more of the object or if it’s a smaller object, perhaps a few single scans are needed in order capture the bottom and top views of the object. Reposition the object on the turntable to prep for the next scan sequence.

16. Next, return to the scan window and complete another scan/scan rotation. In this example, we will complete another scan rotation in order to show how align two rotations to one another.

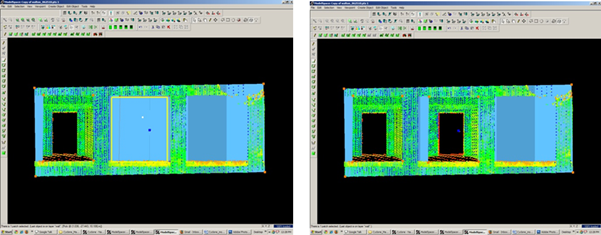

17. Because the object has been repositioned, it is always good to Auto Focus (press the AF button) before each scan/scan rotation. Complete the second scan or scan rotation.

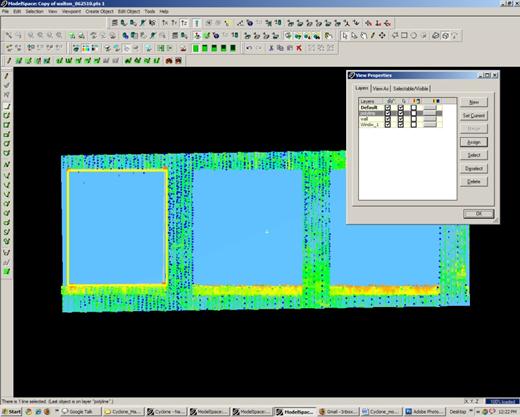

18. In the IMAlign window, you may have to hide the first scan rotation (simply middle click on the group name or right click the name and select View – Hide or use Strl+Shft+D) to more easily view the new data. Go ahead and delete any extraneous data from the scene and group the scans from the second rotation (select all, right click and select Group).

19. To align the second rotation to the first rotation, first unhide the first rotation (middle click the group name or right click and select View – Restore or use Ctrl+Shft+R). Next select the split view alignment button and independly rotate each view so that they are roughly the same perspective of the scan object .

20. Now select the N Point Pairs button (Align Menu – N Point Pairs) and identify at least three pick points between the two views. Right click when complete and you should now see the second rotation roughly aligned to the first. Run a best fit alignment to optimize the alignment and then lock the second scan group. This is a very basic description of alignment in IMAlign, for more detail please refer to the Registering (aligning) Scan in Polyworks IMAlign workflow.

21.

- Complete as many scans/scan rotations that are necessary in order to get a complete digital model of your object. Small holes can be holefilled with additional post processing in IMEdit.

22. Refer to the Registering (aligning) Scan in Polyworks IMAlign workflow for further details on scan alignment and the Creating a Polygonal Mesh using IMMerge workflow for more information on creating a polygonal mesh file in Polyworks.

[/wptabcontent]

[/wptabs]

Posted in Uncategorized

Konica-Minolta Vivid 9i – Setup with turntable

This document will guide you through setting up the Konica-Minolta for use with a turntable.

Hint: You can click on any image to see a larger version.

[wptabs style=”wpui-alma” mode=”vertical”] [wptabtitle] TRIPOD & MOUNT [/wptabtitle]

[wptabcontent]

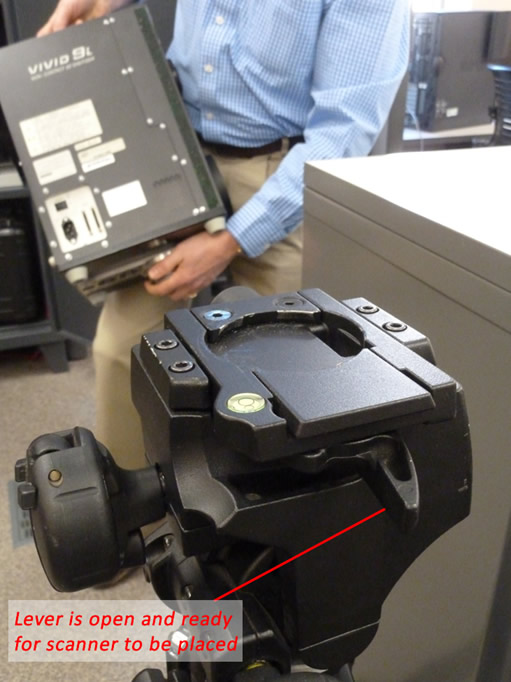

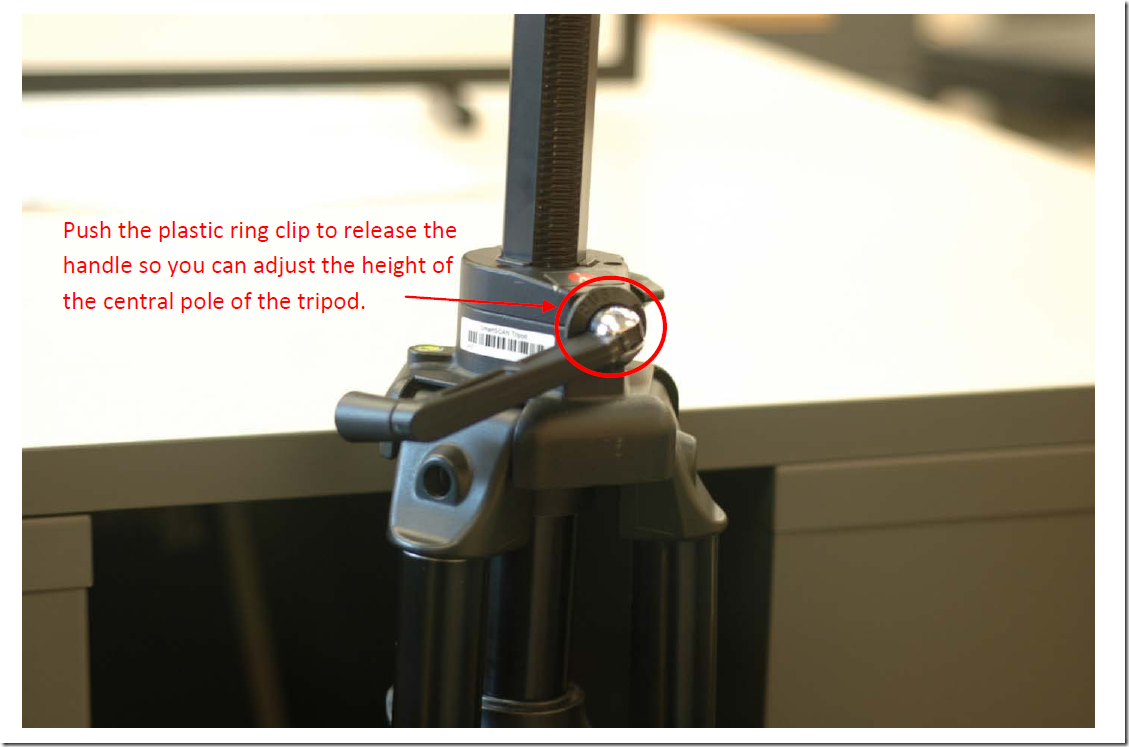

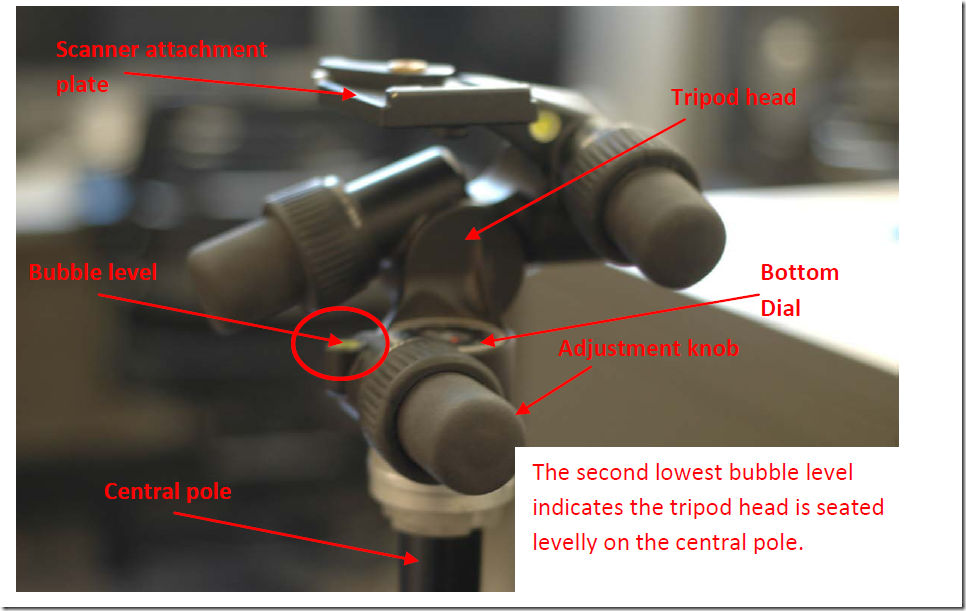

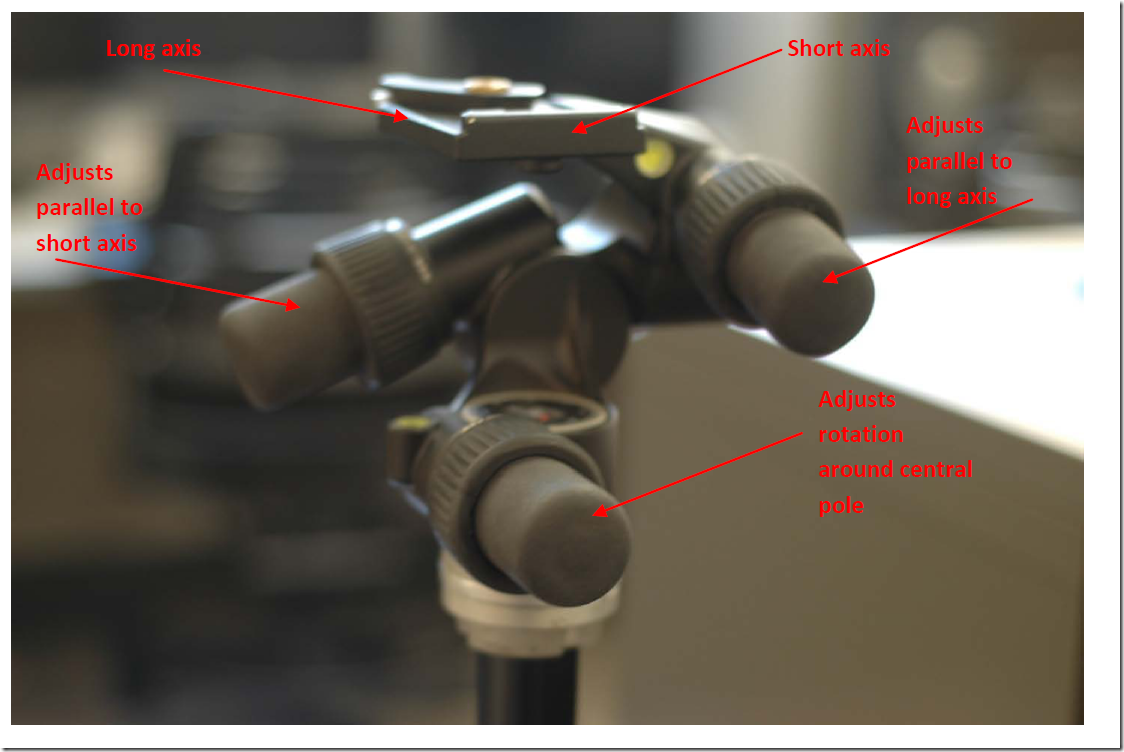

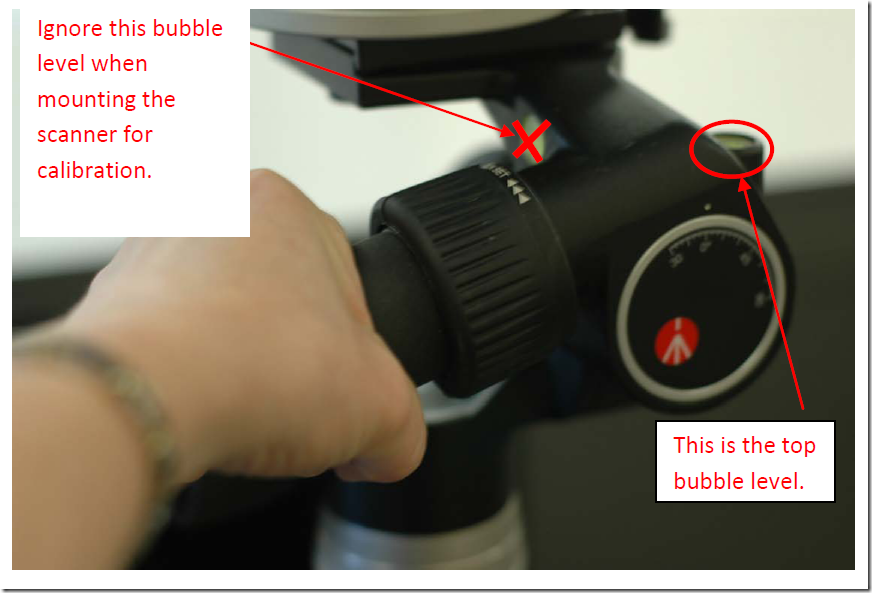

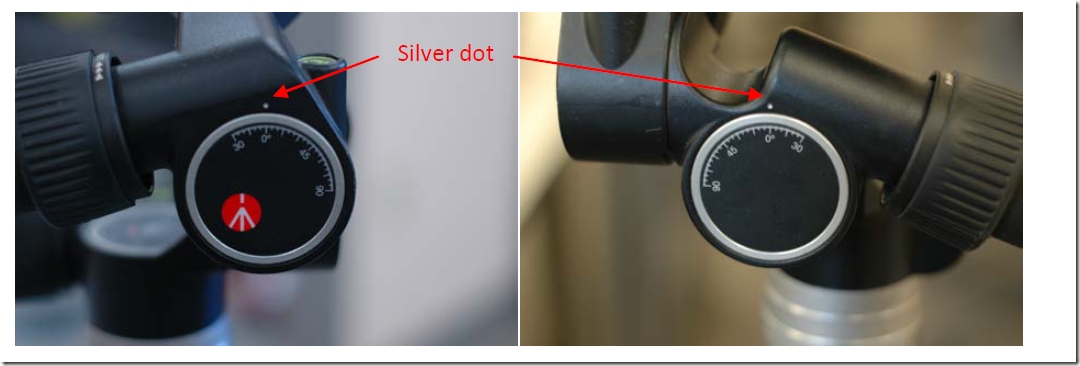

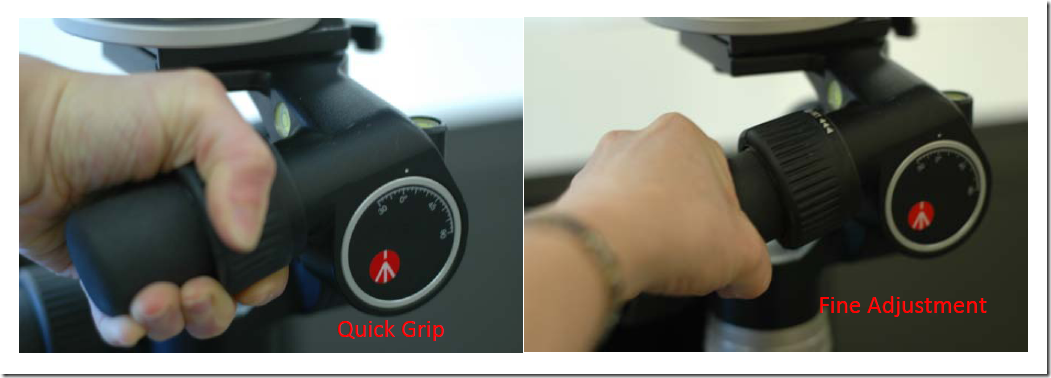

1. Setup the scanner tripod. The tripod doesn’t have to be completely level but you want it to be stable so some degree of leveling is required.

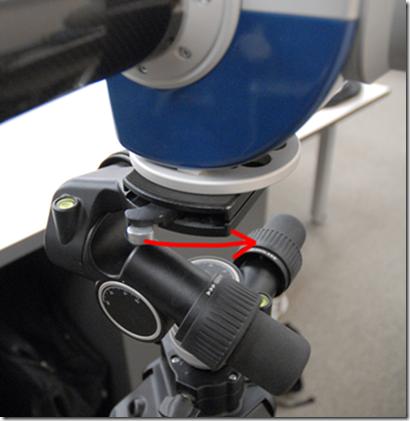

2. Prep the scanner mount by pulling the black lever out, pushing up the gold pin, and then releasing the lever. This widens the slot where the scanner mount rests.

When done correctly, the lever will continue to stick out.

[/wptabcontent]

[wptabtitle] CONNECT SCANNER [/wptabtitle] [wptabcontent]

3. Place the scanner on the tripod until it locks into place (you will hear a ‘click’ sound when the mount has locked). Once the scanner has been mounted, ensure that your setup is stable.

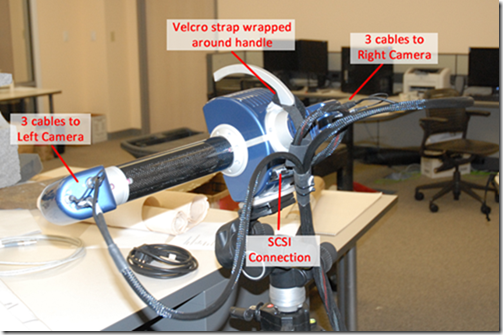

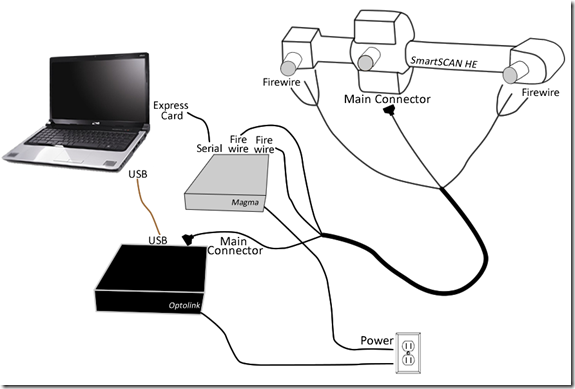

4. Remove the power cable and gray SCSI cable with the PCMCIA card adapter from the small black case. Plug the scanner power cable in and connect the SCSI cable. DO NOT TURN THE SCANNER ON.

5. Remove the card insert from the PCMCIA slot on the laptop and plug the PCMCIA card into the laptop.

[/wptabcontent]

[wptabtitle] SETUP TURNTABLE [/wptabtitle] [wptabcontent]

6. Remove the turntable, black power box, and all cables from the black case. Set the two, black and white calibration charts to the side for now. Place the turntable approximately 1 meter (or slightly less) from the scanner with the corded end facing towards the front i.e. towards the scanner. The suggested scan range for the tele and mid lenses is .6 to 1 meter. When you begin scanning, the distance parameter will be displayed in the scan window. We generally suggest to keep the distance in the range of 700-800 mm to avoid data loss that can occur when an object is either too close or too far from the scanner.

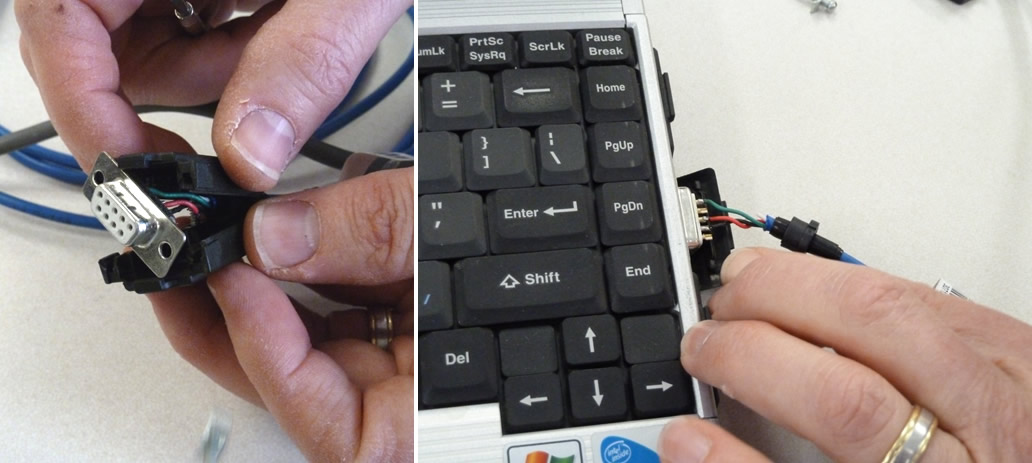

7. Plug the gray cable from turntable into the black power box; also plug the power cable into the black power box. Next, take the blue serial cable and plug the green end (with 4 prongs) into the COM1 port on the black box. NOTE: Theprongs on the green end are directional and will face down.

[/wptabcontent]

[wptabtitle] CONNECT LAPTOP [/wptabtitle] [wptabcontent]

8. Next, take the other end of the serial cable and plug it into the serial connection on the laptop. To work with a laptop, you will probably have to remove the black case around the plug end using a small screwdriver.

[/wptabcontent]

[wptabtitle] POWER UP SCANNER [/wptabtitle] [wptabcontent]

9. Once everything has been plugged in and you have the desired lens in place (see the Changing Lenses section in the final slides of this post if you need to change the scanner lens). Next power on the scanner. It will take a minute to load, when it is complete, it will display the following message on screen “Please open laser barrier and press any key.”

10. Next, pull the large round cap off of the front of the scanner (bottom) and remove the lens cap (top), and then press any button on the back of the scanner to continue.

[/wptabcontent]

[wptabtitle] OPEN POLYWORKS [/wptabtitle] [wptabcontent]

11. Next power on the laptop and log on. Note: It is important to power the scanner first and then the laptop so the connection is established.

12. Once everything has been turned on, open Polyworks on the laptop. Once the Polyworks Workspace Manager opens, open the IMAlign Module. Next, go to Scanners menu, and select Minolta – VIVID 9i. In the window that comes up, you should see a live camera view from the scanner (connection successful – hooray.) You are now ready to continue to the Konica-Minota Vivid 9i – Scan Settings post.

[/wptabcontent]

[wptabtitle] VIVID 9i LENSES [/wptabtitle] [wptabcontent]

Changing the Lenses on the VIVID 9i

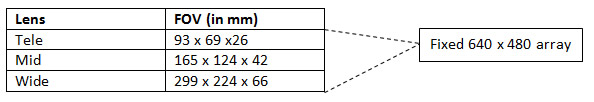

The VIVID 9i comes with a set of three interchangeable lenses: tele, middle, and wide. The scanner’s camera array is fixed 640X480 pixels. Each lens offers a different field of view with the tele being the smallest. The FOV for each lens is provided below.

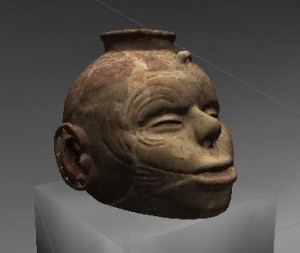

The tele lens offers the best resolution and is ideal for scanning objects smaller than a baseball or larger objects with a higher resolution. The mid lens works well for objects that are basketball size and was the lens predominantly used for scanning pottery vessels in the Virtual Hampson Museum project. CAST researchers generally do not recommend use of the wide angle lens.

[/wptabcontent]

[wptabtitle] CHANGING LENSES I [/wptabtitle] [wptabcontent]

A. To change the lens on the VIVID 9i, first make sure the scanner is turned off.

B. Next remove the lens that you wish to use from the lens box and its plastic bag.

C. Next remove the lens protector and unscrew the lens that is currently on the scanner. IMMEDIATELY place the lens cap (located on the lens box) on the back of the lens.

NOTE: Every lens should always have 2 lens caps (one of the front and back of the lens) when the lens is not in use or is being stored.

[/wptabcontent]

[wptabtitle] CHANGING LENSES II [/wptabtitle] [wptabcontent]

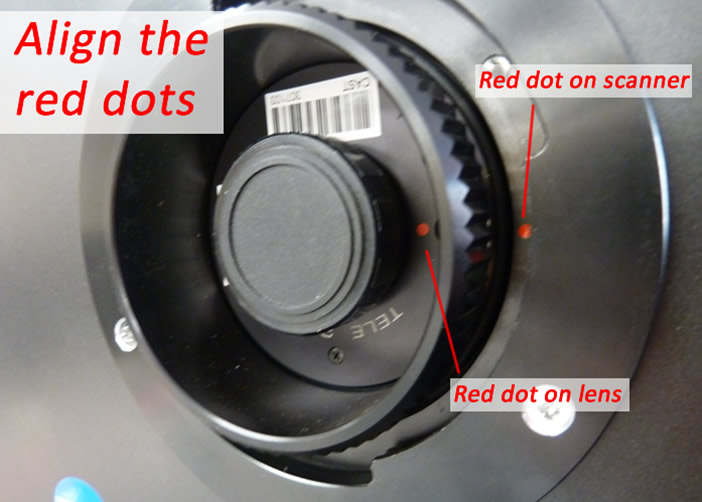

D. Remove the back lens cap from the desired lens and screw the new lens into the scanner by first lining up the red dots found on both the lens and the scanner.

E. Replace the black lens protector on the scanner. Replace both lens caps on the newly removed lens, place in a plastic bag, and store in the lens box.

F. You are ready to begin scanning. Power on the scanner and continue with Step 9 on the ‘Power up Scanner’ Slide above.

[/wptabcontent]

[wptabtitle] CONTINUE TO… [/wptabtitle] [wptabcontent]

Continue to Konica-Minota Vivid 9i – Scan Settings.

[/wptabcontent]

[/wptabs]

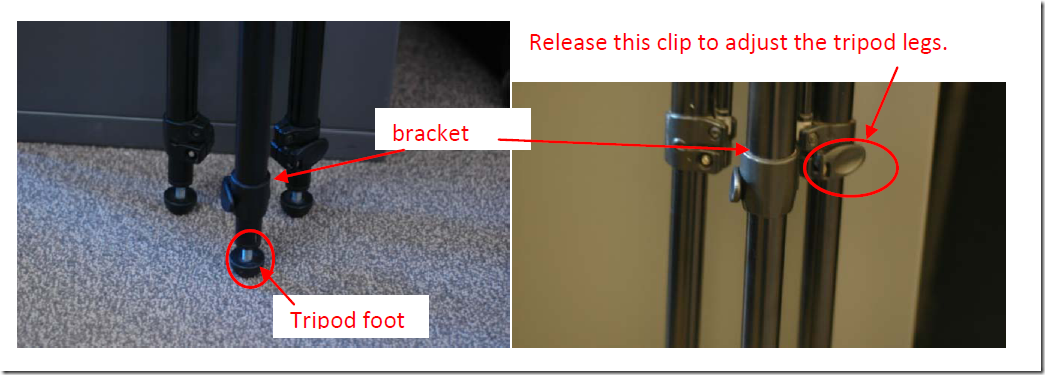

Leica GS15: Tripod Setup

This page will show you how to set up a tripod for use with a Leica GS15 GNSS receiver used as the base in an RTK survey or as the receiver in a static or rapid static survey.

Hint: You can click on any image to see a larger version.

[wptabs mode=”vertical”]

[wptabtitle] Hardware[/wptabtitle]

[wptabcontent]

You will need a GS15 receiver, a brass tripod adapter, and one of the heavy duty, fixed height, yellow tripods.

[wptabtitle] Brass Adaptor[/wptabtitle]

[wptabcontent]

Screw the brass tripod adaptor into the base of the GS15.

Stand the fixed-height tripod on its center tip, loosen the brass thumbscrew in the side of the top mounting plate, insert the brass adapter on the antenna into the mounting hole, and then tighten the thumbscrew.

[wptabtitle] Extend Center Leg[/wptabtitle]

[wptabcontent]

At the base of the tripod, flip the lever to release the center leg, and then extend it fully to the 2-meter mark.

There is metal pin attached by a wire to the base of the tripod – insert the pin through the holes in the rod at the 2-meter mark, then put the weight of the tripod on to the center pole so that the pin is pushed firmly against the clamp body. Now flip the lever to lock the clamp.

[wptabtitle] Position Tripod[/wptabtitle]

[wptabcontent]

Carefully place the tip of the center pole at the exact spot on the ground that you wish to survey.

[wptabtitle] Release Tripod Legs[/wptabtitle]

[wptabcontent]

Two of the side legs have squeeze clamps at their upper end. To release them from the tripod base, squeeze the clamp and lift the leg up so that the tip clears the base. Angle the leg away from the tripod body and flip the lever to release the lower clamp on the leg. Extend the lower portion of the leg all the way, and then lock the clamp again.

[wptabtitle] Extend Legs[/wptabtitle]

[wptabcontent]

Finally, use the squeeze clamp to extend the leg all the way to the ground, forming a stable angle with the center pole. Repeat this operation with the second leg that also has a squeeze clamp.

[wptabtitle] Secure Legs[/wptabtitle]

[wptabcontent]

The third leg is different; instead of a squeeze clamp, it uses a thumb screw to tighten the upper section.

Loosen this thumbscrew, and then extend the leg and place its tip as you did on the other two legs. Be sure to leave the thumbscrew loose. At this time, if your are on soft ground, you should use your foot to push each of the three legs (not the center pole) into the ground.

[wptabtitle] Level Tripod[/wptabtitle]

[wptabcontent]

The tripod must be completely level to get an accurate measurement. Locate the bubble level mounted on the side of the tripod. Place a hand on each of the squeeze clamps, squeeze them to release their lock, and very carefully push or pull to align the air bubble in the level within the inner marked circle.

When you have the tripod level, release both of the squeeze clamps, and check your level again. If it is still level, lock the thumbscrew on the third leg and you are finished with the tripod.

[/wptabs]

Posted in GPS, Hardware, Leica GS15 Receiver, Setup Operations, Uncategorized

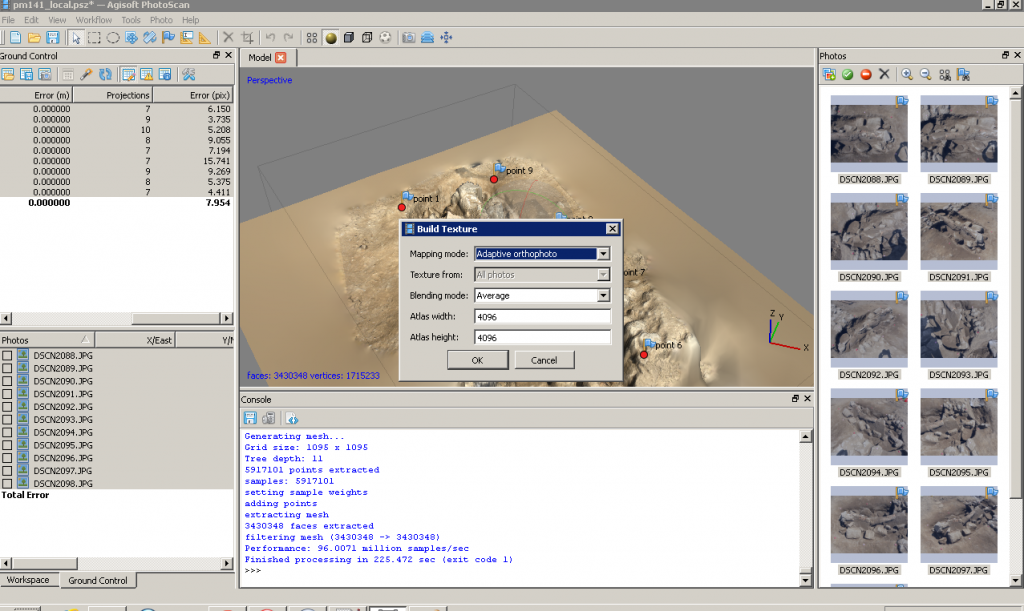

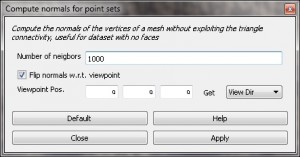

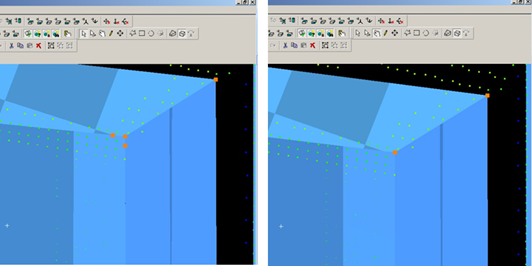

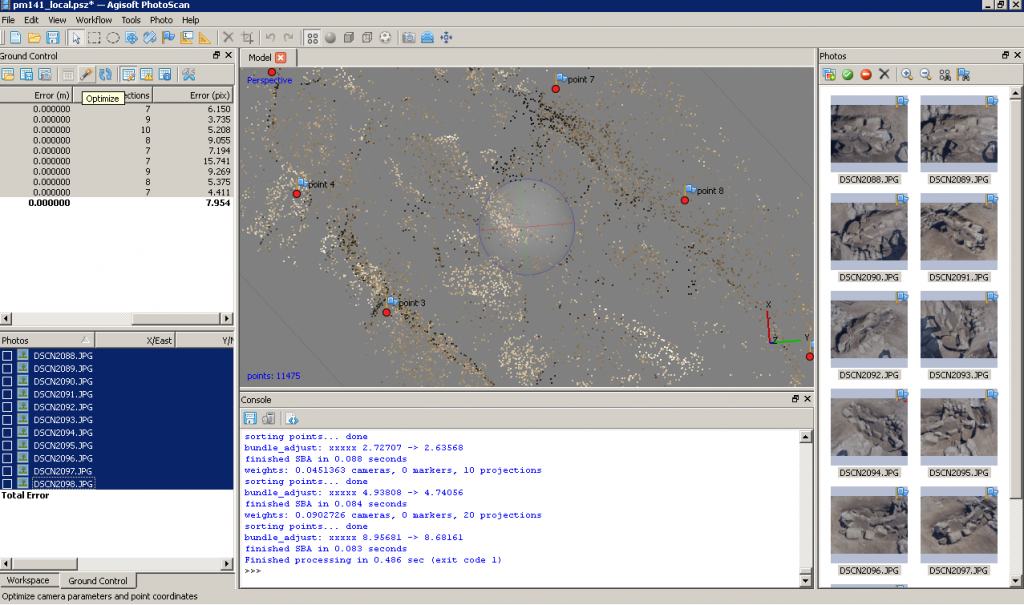

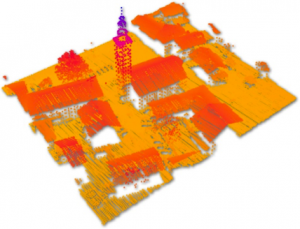

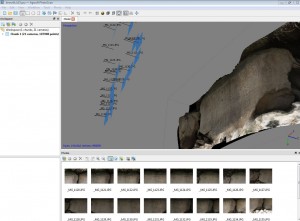

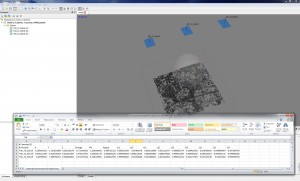

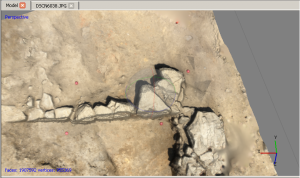

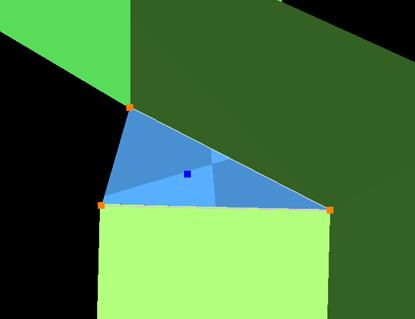

PhotoScan – Building Geometry & Texture for Photogrammetry

This post will show you how to build the geometry and texture for your 3D model and how to export it for use in ArcGIS.

Hint: You can click on any image to see a larger version.

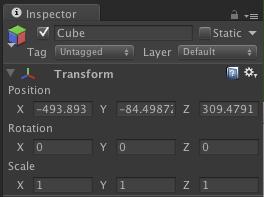

[wptabs mode=”vertical”] [wptabtitle] Rebuild Geometry[/wptabtitle]

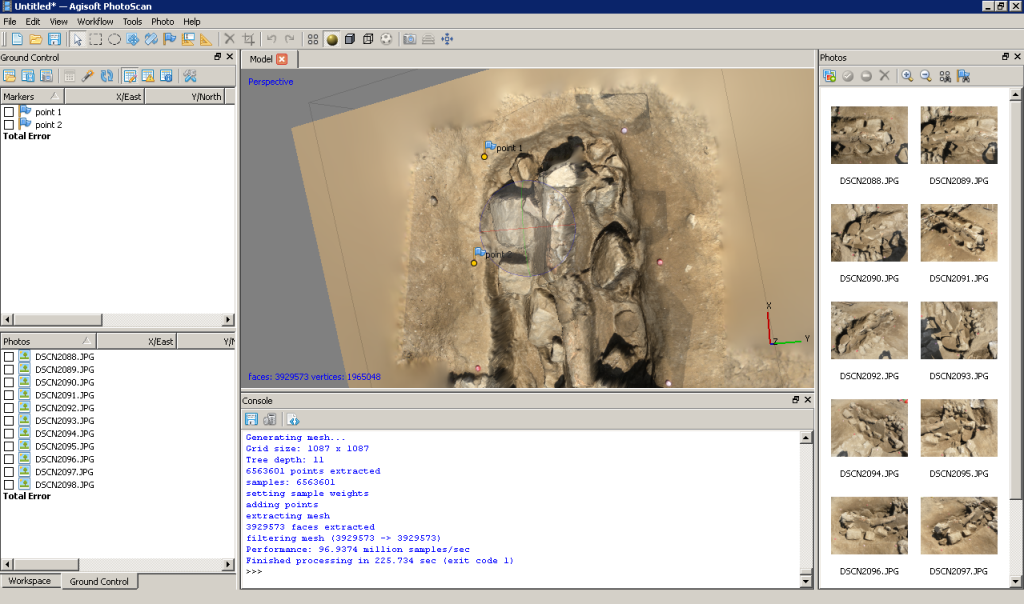

[wptabcontent]After the model is georeferenced, rebuild geometry at the desired resolution. Photoscan produces very high poly-count models, so we like to build two models, a high resolution one for archiving and measurements and a lower resolution one for import into ArcGIS for visualization and general reference. Keeping the polycount low (circa 100,000 faces) in the ArcGIS database helps conserve space and speeds up loading time on complicated scenes with multiple models. To make sure the lower poly-count model looks good, we build the textures using the high poly-count model and apply them to the lower poly-count model.

So… Select ‘Workflow’ and ‘Build Geometry’ from the main menu as before. Then select ‘Workflow’ and ‘Build Texture’.

[/wptabcontent]

[wptabtitle]Decimate the model[/wptabtitle]

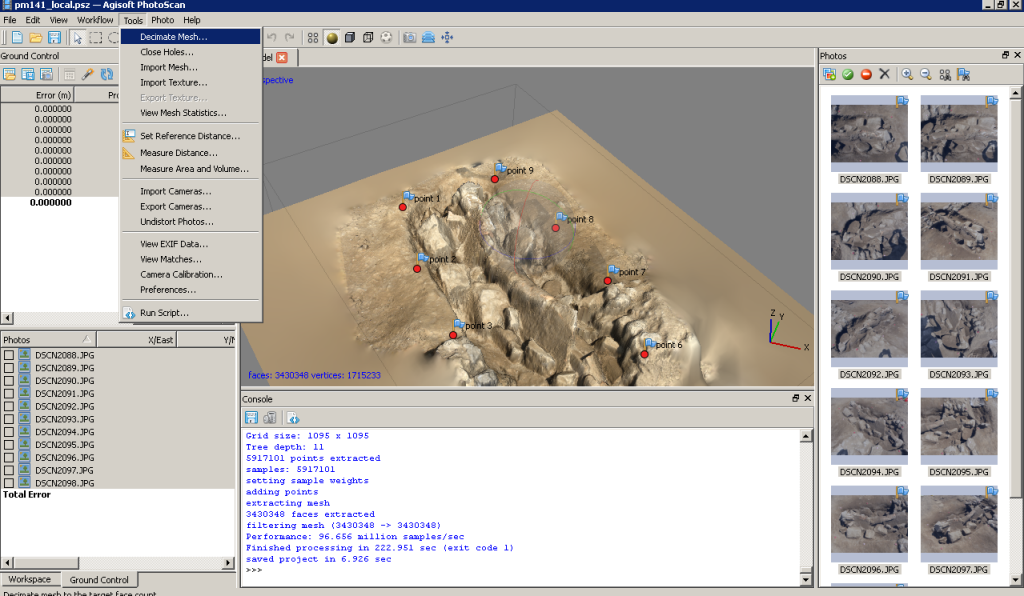

[wptabcontent]Under ‘Tools’ in the main menu you can select ‘Decimate’ and set the desired poly-count for the model. The decimated model will likely have a smoother appearance when rendered based on vertex color, but will appear similar to the higher poly-count model once the texture is applied.

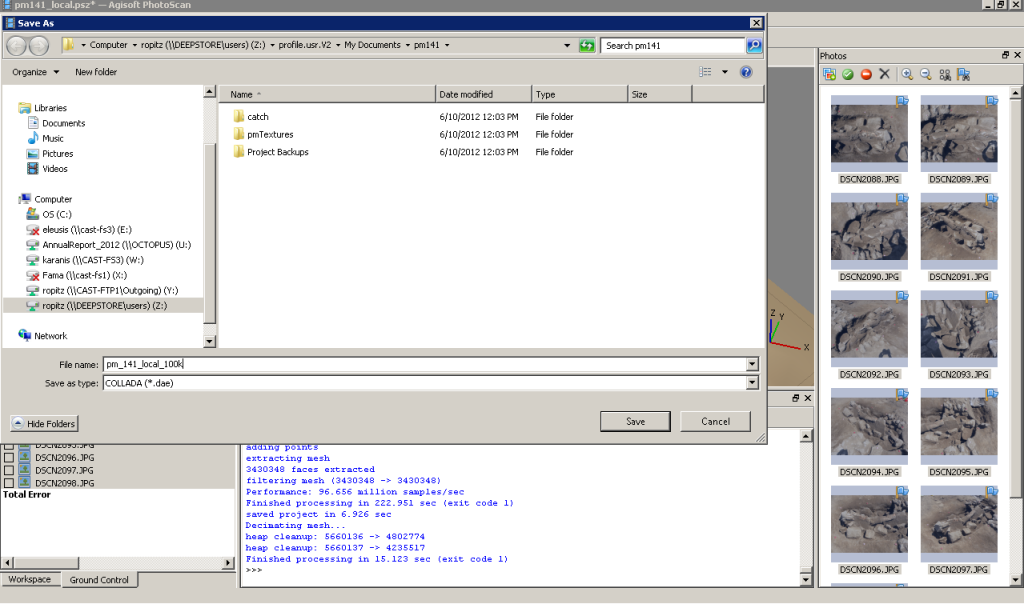

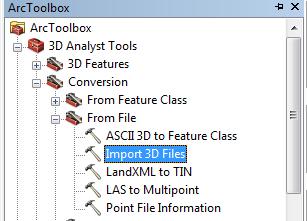

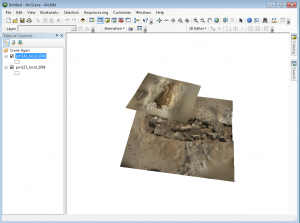

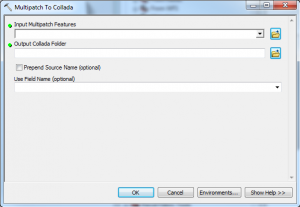

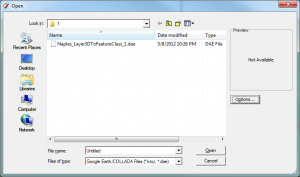

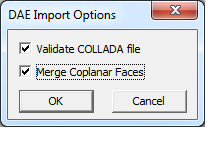

[wptabtitle]Export the model[/wptabtitle]

[wptabcontent] Export the models and save them as collada files (.dae) for import into ArcGIS. You may select a different format for archiving, depending on your project’s system. Choose ‘File’ and ‘Export Model’ from the main menu.

[/wptabcontent]

[wptabtitle] Continue to…[/wptabtitle]

Continue to Photoscan to ArcGIS

[wptabcontent]

[/wptabs]

Posted in Workflow

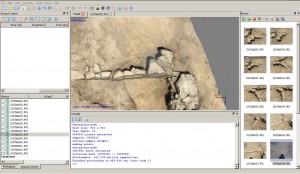

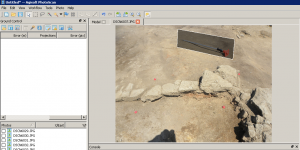

PhotoScan – Basic Processing for Photogrammetry

This series will show you how to create 3d models from photographs using Agisoft Photoscan and Esri ArcGIS.

Hint: You can click on any image to see a larger version.

Many archaeological projects now use photogrammetric modeling to record stratigraphic units and other features during the course of excavation. In another post we discussed bringing photogrammetric or laserscanning derived models into a GIS in situations where you don’t have precise georeferencing information for the model. In this post we will demonstrate how to use bring a photogrammetric model for which georeferenced coordinates are available, using Agisoft’s Photoscan Pro and ArcGIS.

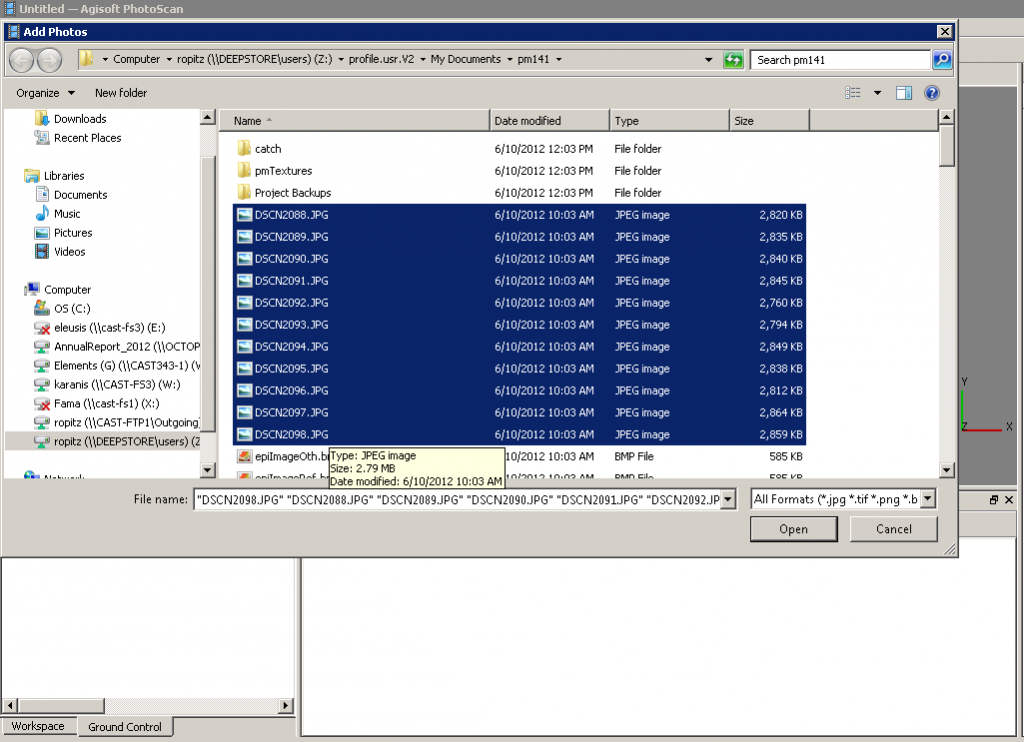

[wptabs mode=”vertical”] [wptabtitle] Load Photos[/wptabtitle] [wptabcontent]Begin by adding the photos used to create the model to an empty project in Photoscan.

[/wptabcontent]

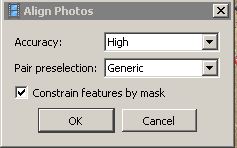

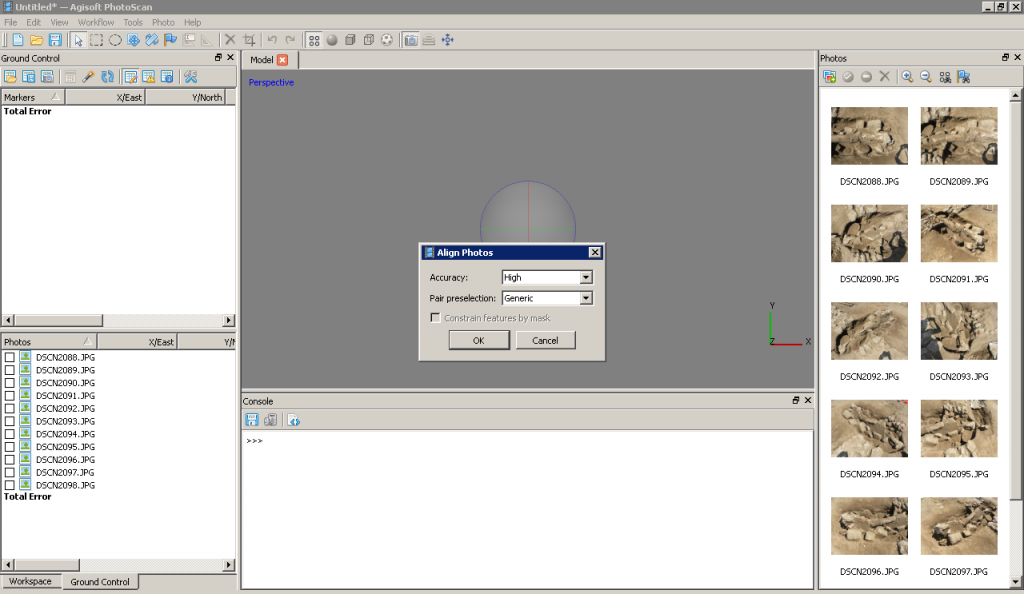

[wptabtitle] Align Photos[/wptabtitle] [wptabcontent]Following the Photoscan Workflow, next align the images. From the menu at the top choose ‘Workflow’>’Align Images’. A popup box will appear where you can input the alignment parameters. We recommend selecting ‘High’ for the accuracy and ‘Generic’ for the pair pre-selection for most convergent photogrammetry projects.

[/wptabcontent]

[wptabtitle] A choice[/wptabtitle] [wptabcontent]At this point there are two approaches to adding the georeferenced points to the project. You can place the points directly on each image and then perform the bundle adjustment, or you can build geometry and then place the points on the 3d model, which will automatically place points on each image, after which you can adjust their positions. We normally follow the second approach, especially for projects where there are a large number of photos.

[/wptabcontent]

[wptabtitle]Build Geometry[/wptabtitle]

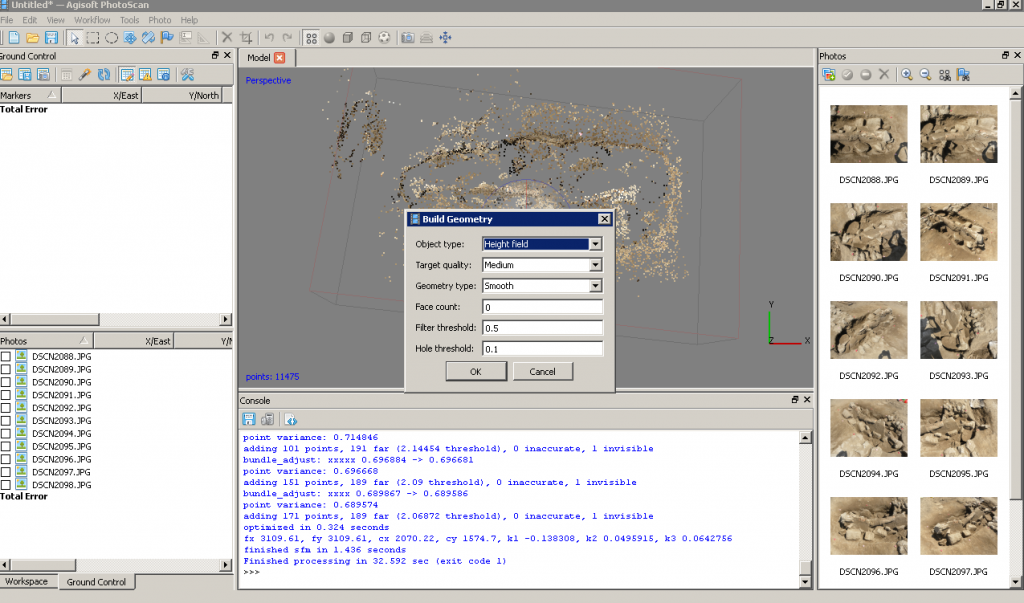

[wptabcontent]Under ‘Workflow’ in the main menu, select ‘Build Geometry’. At this point we don’t need to build an uber-high resolution model, because this version of the model is just going to be used to place the markers for the georeferenced points. A higher resolution model can be built later in the process if desired. Therefore either ‘Low’ or ‘Medium’ are good choices for the model resolution, and all other parameters may be left as the defaults. Here we have selected ‘Medium’ as the resolution.

[wptabtitle]Get the georeferenced points[/wptabtitle]

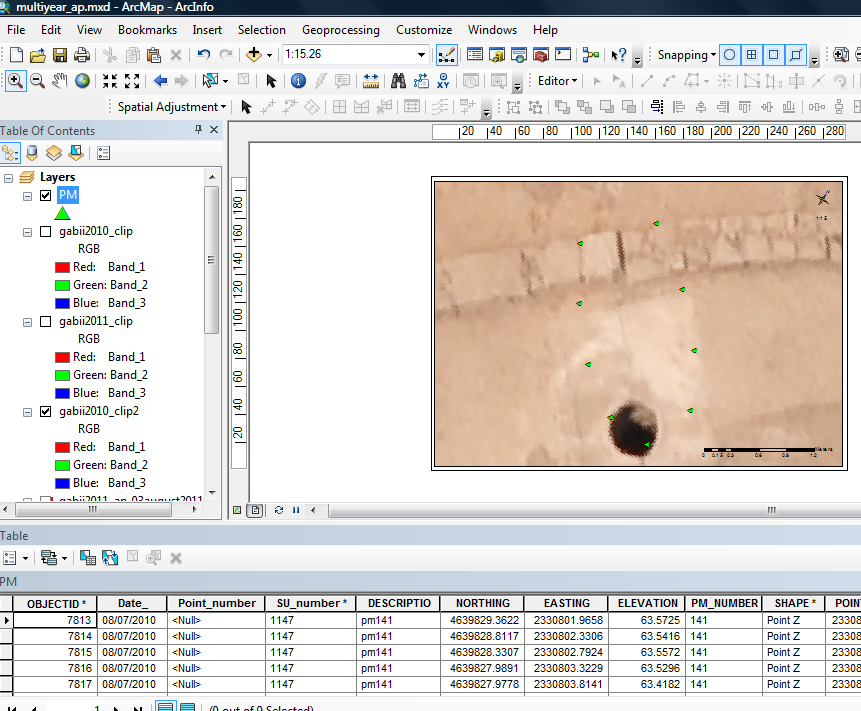

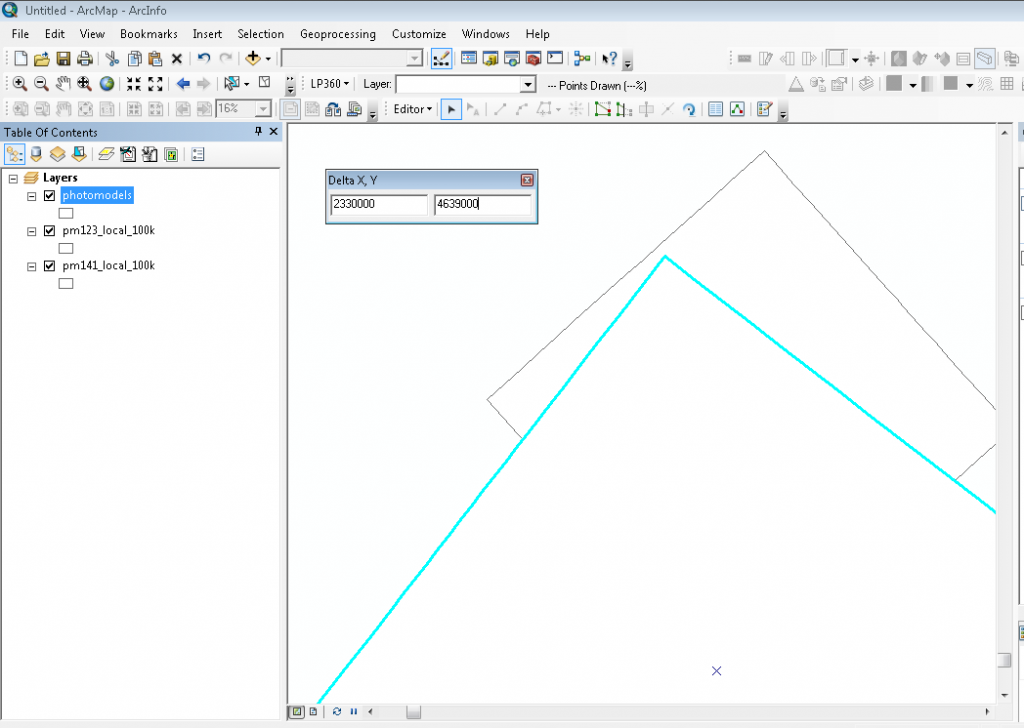

[wptabcontent]When the photos for this model were taken, targets were places around the feature (highly technical coca-cola bottle caps!) and surveyed using a total station. These surveyed targets are used to georeference the entire model. In this project all surveyed and georeferenced points are stored in an ArcGIS geodatabase. The points for this model are selected using a definition query and exported from ArcGIS.

[wptabtitle]Add the georeferenced points[/wptabtitle]

[wptabcontent]On the left you have two tabbed menus, ‘Workspace’ and ‘Ground Control’. Switch to the the ‘Ground Control’ menu. Using the ‘Place Markers’ tool from the top menu, place a point on each surveyed target. Enter the corresponding coordinates from the surveyed points through the ‘Ground Control’ menu. Be careful to check that the northing, easting and height fields map correctly when importing points into Photoscan, as they may be in a different order than in ArcGIS.

[wptabtitle]Local coordinates and projections[/wptabtitle]

[wptabcontent] In practice we have found that many 3d modelling programs don’t like it if the model is too far from the world’s origin. This means that while Photoscan provides the tools for you to store your model in a real world coordinate system, and this works nicely for producing models as DEMs, you will need to use a local coordinate system if you want to produce models as .obj, .dae, .x3d or other modeling formats and work with them in editing programs like Rapidform or Meshlab. If your surveyed coordinates involve large numbers e.g. UTM coordinates, we suggest creating a local grid by splicing the coordinates so they only have 3-4 pre decimal digits. [/wptabcontent]

[wptabtitle]Bundle Adjust – Another Choice[/wptabtitle]

[wptabcontent]After all the points have been placed select all of them (checks on). If you believe the accuracy of the model is at least three time greater than the accuracy of the ground control survey you may select ‘update’ and the model will be block shifted to the ground control coordinates. If you believe the accuracy of the ground control survey is near to or greater than the accuracy of the model, you should include these points in your bundle adjustment to increase the overall accuracy of the model. To do this select ‘optimize’ from the ‘Ground Control’ menu after you have added the points. After the process runs, you can check the errors on each point. They should be less than 20 pixels. If the errors are high, you can attempt to improve the solution by turning off the surveyed points with the highest error, removing poorly referenced photos from the project, or adjusting the location of the surveyed points in individual images. After adjustments are made select ‘update’ and then ‘optimize’ again to reprocess the model.

[wptabtitle] Continue to…[/wptabtitle]

Continue to PhotoScan – Building Geometry & Texture for Photogrammetry

[wptabcontent]

[/wptabs]

Posted in Workflow

Tagged ArcGIS, Data Processing, Modeling, Photogrammetry, Photoscan

Acquire External Control with Trimble 5700/5800

This page is a guide for acquiring external control for close range photogrammetry using Trimble survey grade GPS.

Hint: You can click on any image to see a larger version.

[wptabs mode=”vertical”]

[wptabtitle] Prepare for Survey[/wptabtitle]

[wptabcontent]

- Begin metadata process

- Choose a method for documenting the project (e.g. notebook, laptop)

- Fill in known metadata items (e.g. project name, date of survey, site location, etc.)

- Create a sketch map of the area (by hand or available GIS/maps)

- Choose and prepare equipment

- Decide what equipment will best suite the project

- Test equipment for proper functioning and charge/replace batteries

[/wptabcontent]

[wptabtitle] Equipment Setup[/wptabtitle]

[wptabcontent]

- Base station

- Setup and level the fixed height tripod over the point of your choice

- Attach the yellow cable to the Zephyr antenna

- Place the Zephyr antenna on top using the brass fixture and tighten screw

- Attach the yellow cable to the 5700 receiver

- Attach the external battery to the 5700 receiver (if using)

- Attach the data cable to the TSCe Controller and turn the controller on

- Create a new file and begin the survey

- Disconnect TSCe Controller

- Rover

- Put two batteries in the 5800

- Attach the 5800 to the bipod

- Attach TSCe Controller to bipod using controller mount

- Connect data cable to 5800 and TSCe Controller

- Turn on the 5800 and controller

- Create a new project file (to be used all day)

[/wptabcontent]

[wptabtitle] Collecting Points[/wptabtitle]

[wptabcontent]

- Have documentation materials ready

- As you collect points, follow ADS standards

- Base station

- Once started, the base station will continually collect positions until stopped

- When you’re ready to stop it, connect the TSCe controller to the receiver and end the survey

- Rover

- When you arrive at a point you want to record, set the bipod up and level it over the point

- Using the controller, create a new point and name it

- Start collecting positions for the point and let it continue for the appropriate amount of time

- Stop collection when time is reached and move to next position

[/wptabcontent]

[wptabtitle] Data Processing[/wptabtitle]

[wptabcontent]

- Have documentation materials ready

- As you process the data, follow ADS standards

- Transfer data

- Use Trimble Geomatics Office (TGO) to transfer data files from the TSCe Controller and the 5700 receiver to the computer

- Calculate baselines

- Use TGO to calculate baselines between base station and rover points

- Apply adjustment and export points

[/wptabcontent]

[/wptabs]

Posted in GPS, Hardware, Setup Operations, Setup Operations

Tagged Centimeter, Data Collection, GPS, GPS model 5700, GPS model 5800, GPS Pathfinder Office, Photogrammetry, Sub-meter, Trimble GPS, Workflow

Pathfinder Office "How To" Guide

This page demonstrates how to create a data dictionary in Pathfinder Office, transfer it to a GPS receiver, transfer data from the receiver to Pathfinder Office, differentially correct the data, and export it for use in ArcGIS.

Hint: You can click on any image to see a larger version.

[wptabs mode=”vertical”]

[wptabtitle] Create Data Dictionary[/wptabtitle]

[wptabcontent]

A data dictionary makes GPS mapping easier by allowing you to predefine and organize point, line, and area features and their associated attributes. The feature and attribute examples in this guide are used to demonstrate how to use the Data Dictionary Editor in Pathfinder Office and to demonstrate the types of parameters that can be set.

To begin, open Pathfinder Office and create a new project. Make sure that the outputs are going to your preferred drive and file location. Click on “Utilities” located along the menu bar. Choose “Data Dictionary Editor” and provide an appropriate title for your data dictionary. For the purposes of this guide, the name of the data dictionary is “sample.”

Next, you may define the features to be included in the data dictionary. The features will belong to one of the following feature classes: point, line, or area.

[wptabtitle] Point Feature[/wptabtitle]

[wptabcontent]

Create a point feature by selecting “New Feature.” The feature classification will be “point.” In this example, the point features that we want to map are bus stops. So, the feature name will be “BusStops.”

Under the “Default Settings” tab, choose a logging interval of 1 second and set minimum positions to 120. The logging interval determines how often a GPS position is logged. Acquiring at least 120 positions per point feature will ensure better accuracy (the positions are averaged to determine location). During data collection, the receiver will give a warning message if you attempt to stop logging the feature before 120 positions have been recorded. When finished with the parameters on these tabs, press OK.

To set attribute information to be recorded with the mapped feature, select “New Attribute.” A window will open allowing you to select the type of attribute you wish to define. In this example we would like to name the bus route. Therefore the attribute type will be text. Choose “Text” and enter “RouteName” in the Name field. Note that the Length field is important because the value specifies the number of characters that can be entered when defining the attribute (be sure that the value is sufficient for the length needed). In the New Attribute dialog box, there is an option to require field entry upon creation. Selecting this option will ensure that you enter the attribute upon creation of the feature during data collection. Click OK to save the attribute – you will see it appear in the Attributes window in the Data Dictionary Editor.

You may now define another attribute type. For example, we may wish to record how many people were at the bus stop at the date and time that the feature was mapped. In this case, we want to select both “Date” and “Time” from the New Attribute Type window. To record the number of people, a numeric attribute is needed. After choosing “Numeric,” enter “# of people” in the name field. Note that a minimum and maximum value should be set for the number of people possible (for example, a minimum of 0 and max of 50). You must also set a default number that is within the range of the minimum and maximum values. If you do not update this attribute during data collection, the default value will be used.

Finish setting all of the desired attributes for the point feature and then close the New Attribute Type dialog box before creating the next feature in the data dictionary.

[wptabtitle] Line Feature[/wptabtitle]

[wptabcontent]

Create a line feature by selecting “New Feature.” The feature classification will be “line.” In this example, the line features that we want to map are sidewalks. So, the feature name will be “Sidewalks.”

Under the Default Settings tab, choose a logging interval of 5 seconds. Logging one position every 5 seconds should be sufficient when collecting a feature while walking. You may want to experiment with different values here to test the accuracy of your results. When finished with the parameters on these tabs, press OK.

To set attribute information to be recorded with the mapped feature, select “New Attribute.” A window will open allowing you to select the type of attribute you wish to define. In this example we would like to specify the type of material that the sidewalk is composed of (cement, asphalt, or unpaved). The easiest way to record this attribute type is with a menu. Choose “Menu” and enter “Material” in the Name field. Under “Menu Attribute Values,” press the “New…” button. Type “cement” in the Attribute Value field and press “Add.” Cement will appear under “Menu Attribute Values” and the New Attribute Value – Menu Item window will clear. Next type “asphalt” in the Attribute Value field and press “Add.” Finally “unpaved” may be entered in the Attribute Value field. Press “Add” and then close the New Attribute Value – Menu Item window. To require this attribute to be entered upon creation of the feature, select the “Required” option under Field Entry. Selecting this option will ensure that you enter the attribute upon creation of the feature during data collection.

Press OK to save the attribute before defining the next feature.

[wptabtitle] Area Feature[/wptabtitle]

[wptabcontent]

Create an area feature by selecting “New Feature.” The feature classification will be “area.” In this example, the area features that we want to map are parking lots. So, the feature name will be “ParkingLot.” Under the Default Settings tab, choose a logging interval of 5 seconds. Logging one position every 5 seconds should be sufficient when collecting a feature while walking. You may want to experiment with different values here to test the accuracy of your results. When finished with the parameters on these tabs, press OK.

To set attribute information to be recorded with the mapped feature, select “New Attribute.” A window will open allowing you to select the type of attribute you wish to define. In this example we would like to record the number of handicapped parking spaces in the parking lot. To record the number of handicapped parking spaces, a numeric attribute is needed. After choosing “Numeric,” enter “handicapped spaces” in the name field. Note that a minimum and maximum value should be set for the possible number of handicapped parking spaces(for example, a minimum of 0 and max of 20). You must also set a default number that is within the range of the minimum and maximum values. If you do not update this attribute during data collection, the default value will be used.

Finish setting all of the desired attributes for the area feature and then close the New Attribute Type dialog box.

[wptabtitle] Save Data Dictionary[/wptabtitle]

[wptabcontent]

To save the data dictionary, choose “File” in the menu bar of the Data Dictionary Editor and select “Save As.” Be sure to save the .ddf (data dictionary file) on your preferred drive and file location. The Data Dictionary Editor may then be closed. You are now ready to transfer the data dictionary to the GPS receiver.

[wptabtitle] Transfer .ddf to GPS Receiver[/wptabtitle]

[wptabcontent]

Open either Active Sync (Windows XP) or Windows Mobile Devices (Windows 7) on the computer. Turn on the GPS receiver and then plug it into a USB port on the computer. It should automatically connect to the computer. It is recommended to NOT sync the receiver with the computer.

Open Pathfinder Office (PFO) while the GPS receiver is connected to the computer. In PFO, go to Utilities > Data Transfer > select the “Send” tab > select “Add” > Data Dictionary > the file defaults to the last .ddf that was created (if not – browse to where the .ddf is located) > select the .ddf and click “Open.”

Under Files to Send, select the file by clicking it (it will become highlighted) > Transfer All

Once the data dictionary has been successfully transferred, you may close out of PFO.

You are now ready for data collection!

[wptabtitle] Transfer Data from Receiver to PFO[/wptabtitle]

[wptabcontent]

After data collection, transfer the data from the receiver to Pathfinder Office (PFO) by first connecting the GPS receiver to the computer. Open Pathfinder Office and then open the project.

Within PFO, choose Utilities > Data Transfer > Add > Data File. Select the files to transfer and choose Open. Highlight the file(s) and choose Transfer all. Close the data transfer box once the file(s) successfully transferred.

View your file by opening the Map (View > Map) and then choosing File > Open > and then selecting the file.

At this point, you may wish to improve the accuracy of the data through differentially correction.

[wptabtitle] Differential Correction[/wptabtitle]

[wptabcontent]

To differentially correct your features, complete the following steps:

Open the data in PFO > go to Utilities > Differential Correction > Next > Auto Carrier and Code Proc > Next > Output Corrected and Uncorrected > Use smart auto filtering > Re-correct real-time positions > OK > Next > Select your nearest Base Provider.

A report showing the accuracy of the results will be generated after differential correction has been completed. The differentially corrected data may be exported for use in other programs including ArcGIS.

[wptabtitle] Export Data for use in ArcGIS[/wptabtitle]

[wptabcontent]

To export the data from PFO, select Utilities > Export. Be sure the Output Folder is set to where you want the output file located (and be sure to remember the file path).

Export as Sample ESRI Shapefle Setup > OK.

Make sure that you are also exporting the uncorrected positions (select “Properties…” > select the “Position Filter” tab > check the box next to “Uncorrected” under Include Positions that Are > OK). This will ensure that positions that have not been corrected will also be exported.

Continue with the export even if no ESRI projection file has been found.

PFO can be closed once file has been successfully exported.

[/wptabs]

Octocopter – Check List

Posted in Uncategorized

Ocotcopter – Setup Operation

Posted in Uncategorized

Using TerraSync: The Basics

This page will show you how to configure a GPS receiver with TerraSync and how to set and navigate to waypoints.

Hint: You can click on any image to see a larger version.

This guide is written for use with TerraSync v.5.41. The instructions vary only slightly for earlier versions of the software. Reference the tab titled, Menu Hierarchy to become familiar with the terminology used in the instructions.

[wptabs mode=”vertical”]

[wptabtitle] Menu Hierarchy[/wptabtitle]

[wptabcontent]

The TerraSync menu system hierarchy is as follows: sections, subsections, buttons, and fields (click on image below). The information provided in the tabs throughout this series will utilize this terminology.

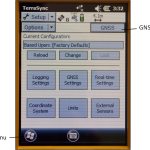

[wptabtitle] Start Terra Sync and Connect to GNSS[/wptabtitle]

[wptabcontent]

Turn on the receiver by pressing the power button. Start TerraSync by opening the Start menu and selecting TerraSync from the menu options. In order to receive information from GNSS satellites, the GPS unit must be connected to the GNSS receiver. To connect to the GNSS receiver, go to the Setup section. Either expand the Options dropdown menu and choose “Connect to GNSS” or press the GNSS button in the upper-right of the screen.

[wptabtitle] Configure GNSS Settings[/wptabtitle]

[wptabcontent]

There are a variety of ways to configure the receiver for data collection under the Setup section in TerraSync. Open each of the six menus to verify that the parameters are set to the desired values. The following are a few parameters to be aware of:

Antenna Settings (under Logging Settings): If you are holding the receiver during data collection and not using an external antenna, you may set the antenna height to 1 meter (which is approximately the height of the receiver above the ground) and choose “Internal” under the Type dropdown menu. When using an external antenna, set the antenna height to the height of the tripod (or whatever height the antenna will be from the ground) and remember to choose the appropriate antenna from the Type dropdown menu.

Real-time Settings: Generally we set Choice 1 to Integrated SBAS, which provides corrections in real-time when a SBAS satellite is available. Set Choice 2 to Use Uncorrected GNSS. If Wait for Real-time is selected, only positions that have been differentially corrected will be used (meaning you may not be able to collect a position unless the receiver is able to make a real-time correction).

Coordinate System: Typically we use Latitude/Longitude with the WGS 1984 datum. However, there may be instances in which you will want to change the coordinate system.

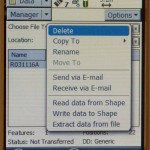

[wptabtitle] Deleting Data[/wptabtitle]

[wptabcontent]

To delete data from the receiver, open the Data section and File Manager subsection. Expand the Choose File Type dropdown menu to select the type of file to delete. Note: It is recommended not to delete Geoid files unless necessary. Select the file you wish to delete by tapping on it (it will be highlighted in blue when it is selected). Press the Options button and choose Delete.

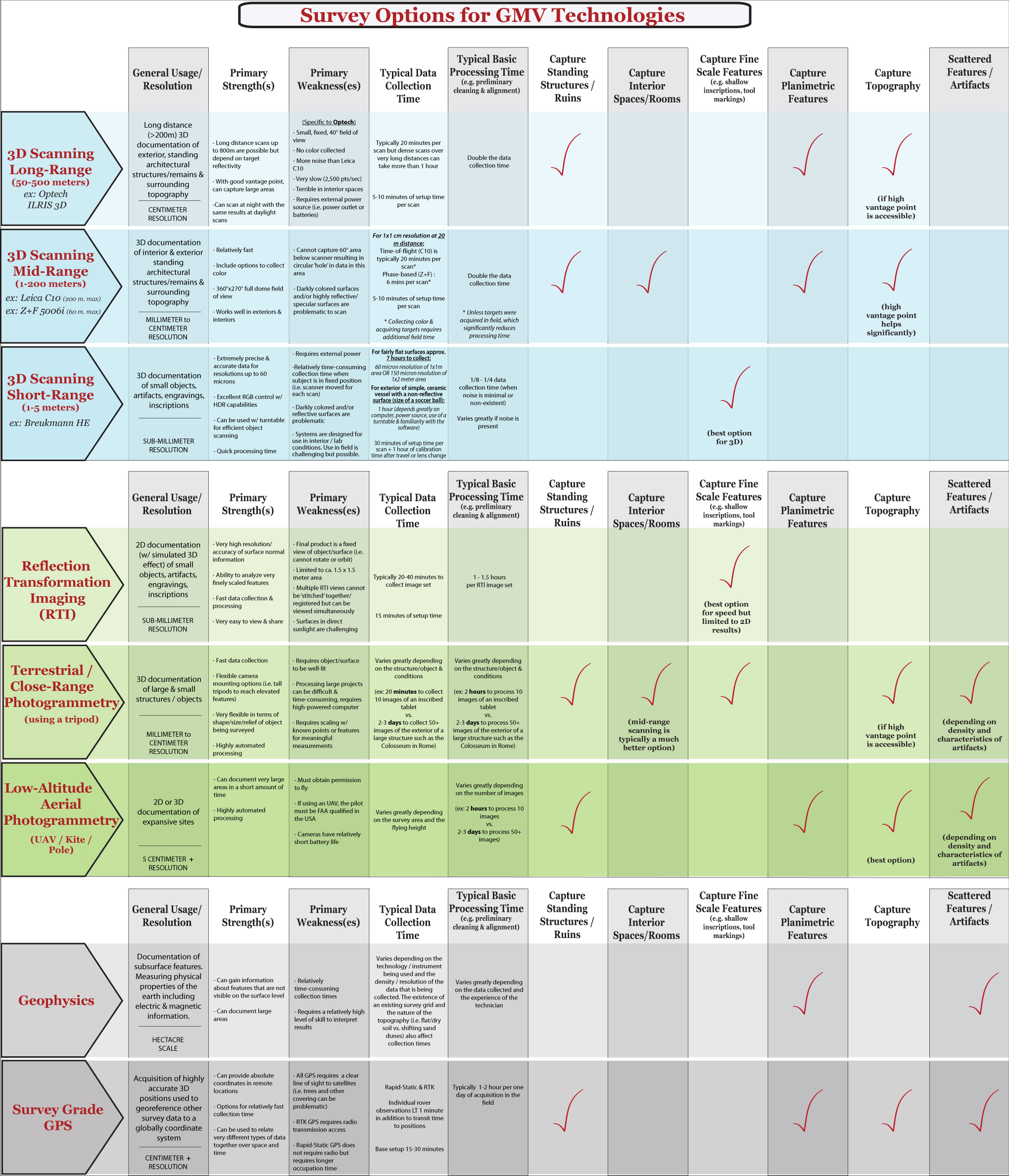

[wptabtitle] Setting Waypoints[/wptabtitle]

[wptabcontent]

To set waypoints on the receiver, open the Navigate section and Waypoint subsection. Tap the New button at the bottom of the screen (note: if a waypoint file is currently open, you must close it before creating a new file – Options > Close File). Give the file a name (or leave the default name) and tap Done. Expand the Options dropdown menu and choose New.

If you have known coordinates to which you would like to navigate, you may manually enter the coordinates. If you wish to mark a GPS position as a waypoint, expand the Create From dropdown menu and choose GNSS. This option auto-fills the coordinates based on the current GPS position.

[wptabtitle] Navigating to a Waypoint[/wptabtitle]

[wptabcontent]

To use the receiver to navigate to an established waypoint, open the Navigate section and Waypoint subsection. Select the waypoint you want to navigate to by tapping the box next to the waypoint (a check will appear in the box). Expand the Options dropdown menu and select Set Nav Target.

Open the Navigate subsection. You should see the name of the waypoint listed at the top of the screen just below the satellite icon. You are now ready to begin navigating. You must start moving in order for the receiver to become oriented in space and give you directions. It doesn’t matter which direction, just start moving. You may adjust the navigation settings by expanding the Options dropdown menu and selecting Navigation Options.

After reaching a waypoint, you may expand the Options dropdown menu while in the Navigate subsection and choose Goto Next Unvisited Waypoint. Alternatively, you may clear the waypoint by opening the Waypoints subsection, expanding the Options dropdown menu and selecting Clear Nav Target. You may then set your next navigation target.

[/wptabs]

Posted in GPS, Setup Operations, Setup Operations, Trimble GeoExplorer, Trimble Juno, Uncategorized

Tagged GeoExplorer, Juno, terrasync, waypoint

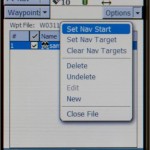

Survey Options for GMV Technologies – Summary Table

Click on the image below to activate the interactive guide. The table summarizes the technologies referenced on the GMV, their typical applications and properties as experienced in the projects and workflows within this site.

Posted in Uncategorized

Leica GS15 RTK: Configuring a GS15 Receiver as a Rover

This page will show you how to use a Leica CS15 to configure a GS15 to be a rover for an RTK GPS survey.

Hint: You can click on any image to see a larger version.

[wptabs mode=”vertical”]

[wptabtitle] Power up the GS15 Rover[/wptabtitle]

[wptabcontent]

Power on the second GS15 receiver – this will be the Rover – and wait for it to start up, then make sure the RTK Rover (arrow pointing down) LED lights up green.

[/wptabcontent]

[wptabtitle] Configure CS15 for Rover[/wptabtitle]

[wptabcontent]

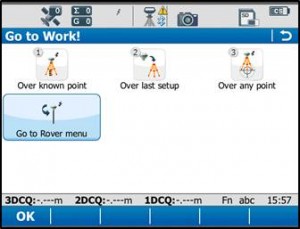

You should probably be at the Base Menu and “Go to Work” (on right) If not you may be in the Job: “Your name” menu and the “Go to Work” button has “Survey & State Points” and “Start Base” as shown below on left.

If you have the left screen (Job: TEST –or whatever you named the job– and “Start Base Station”) you simply need to click on the Go to work” and then select “Go to Base menu” – that will take you to the situation shown on the RIGHT image.

[/wptabcontent]

[wptabtitle] Go to Rover Menu[/wptabtitle]

[wptabcontent]

From the main menu, tap “Go to Work!” then “Go to Rover menu.” The unit will take a few moments to connect to the GS15 rover unit. Note: Tap “No” if the Bluetooth connection Warning appears.

[/wptabcontent]

[wptabtitle] Satellite Tracking Settings[/wptabtitle]

[wptabcontent]

Once in the rover menu, tap “Instrument” → “GPS Settings” → “Satellite Tracking.”

In the Tracking tab, make sure that GPS L5 and Glonass are checked on. Also make sure “Show message & audio warning when loss of lock occurs” is checked. The Advanced tab allows you to define other options for the job, and it is appropriate to use the system default settings. Tap OK when finished.

[/wptabcontent]

[wptabtitle]Quality Control Settings[/wptabtitle]

[wptabcontent]

On the rover main menu, tap “Instrument” → “GPS Settings” → “Quality Control.” Here you can define your specifications for RTK point collection with the rover unit. For high precision survey work, it is a good idea to set the tolerance to less than or equal to 2 centimeters, as this will prevent the logging of an RTK point with error greater than this threshold. Tap OK to return to the main menu when finished.

[/wptabcontent]

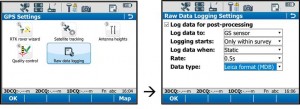

[wptabtitle]Raw Data Logging Settings[/wptabtitle]

[wptabcontent]

On the rover main menu, tap “Instrument” → “GPS Settings” → “Raw Data Logging.” Be sure the box for “Log data for post processing is checked on, then choose where you would like the data to be logged to. We can log to either the receiver’s (GS15) SD card or the controller’s (CS15) SD card. Make sure the controller option has been selected from the drop down list. The remaining options are up to the user to define. Tap OK when done.

[/wptabcontent]

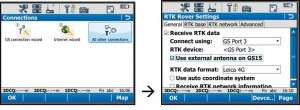

[wptabtitle]RTK Rover Settings: General Tab[/wptabtitle]

[wptabcontent]

On the rover main menu, tap “Instrument” → “Connections” → “All other connections.” On the GS connections tab, tap the RTK Rover connection to highlight it, and then tap “Edit” at the bottom of the screen.

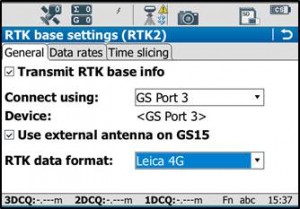

On the General tab, make sure the “Receive RTK data” box is checked on, then verify the following settings:

Connect Using: GS Port 3

RTK Device: Pac Crest ADL (note: different than shown in image above)

(Check on “Use external antenna on GS15”)

RTK Data format: Leica 4G

(Leave the remaining 2 boxes unchecked)

[/wptabcontent]

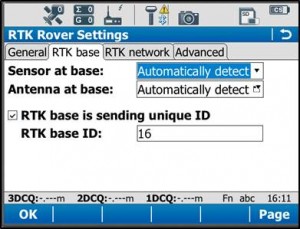

[wptabtitle]RTK Rover Settings: RTK Base Tab[/wptabtitle]

[wptabcontent]

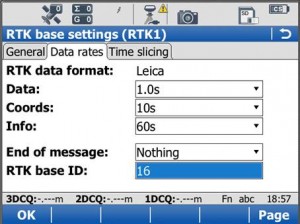

On the RTK base tab, verify the following settings:

Sensor at base: Automatically Detect

Antenna at base: Automatically Detect

(Check on “RTK base is sending unique ID”)

RTK base ID: 16

Tap OK in the bottom left corner. Tap OK if a warning about the antenna pops up. Now tap “Cntrl” at the bottom of the screen. Ensure the following settings are present:

Radio Type: Pac Crest ADL

Channel: 1

Actual frequency: 461.0250 MHz

Tap OK. Tap OK again to return to the main menu.

[/wptabcontent]

[wptabtitle]Finish Setup[/wptabtitle]

[wptabcontent]

At this point the equipment is set up. Now you can begin to take RTK points. You can begin your work or you can power down the CS15 by holding the power button down until the power options menu appears, and select “Turn off.” You can also power down the GS15 receiver by holding the power button until the LEDs flash red.

[/wptabcontent]

[wptabtitle]Continue To…[/wptabtitle]

[wptabcontent]

Continue to “Leica GS15: Tripod Setup”

[/wptabcontent]

[/wptabs]

Leica GS15 RTK: Configuring a GS15 Receiver as a Base

This page will show you how to use a Leica CS15 to configure a GS15 to be a base for an RTK GPS survey.

Hint: You can click on any image to see a larger version.

[wptabs mode=”vertical”]

[wptabtitle] Go to Base Menu[/wptabtitle]

[wptabcontent]

Look at the real-time status icon at the top of the CS15 controller screen. If you see a jagged arrow pointed up, the CS15 is in the base menu, where you want to be. If the arrow is pointed down, the CS15 is in the rover menu. To switch to the base menu, tap “Go to Work!” then tap “Go to Base menu.”

[/wptabcontent]

[wptabtitle] Satellite Tracking Settings[/wptabtitle]

[wptabcontent]

Once in the base menu, tap “Instrument” → “Base Settings” → “Satellite Tracking.”

In the Tracking tab, make sure that GPS L5 and Glonass are checked on. Also make sure “Show message & audio warning when loss of lock occurs” is checked. The Advanced tab allows you to define other options for the job, and it is appropriate to use the system default settings. Tap OK when finished. You will return to the Base menu.

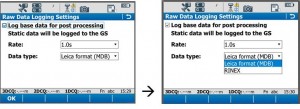

[wptabtitle] Raw Data Logging Settings[/wptabtitle]

[wptabcontent]

On the base menu, tap “Instrument” → “Base Settings” →”Raw Data Logging.”

Make sure the box is checked for logging base data for post processing. Also confirm that the Data Type is in ‘RINEX’ format. Tap OK.

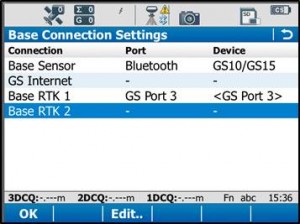

[wptabtitle] Enter Base Connection Settings[/wptabtitle]

[wptabcontent]

Back on the base menu, tap “Instrument” → “Base Connections” → “All other connections.”

Tap the Base RTK 2 connection to highlight it, and then tap “Edit” at the bottom of the screen.

[wptabtitle] Base Connection Settings: General Tab[/wptabtitle]

[wptabcontent]

In the General tab, ensure the box is checked on for “Transmit RTK base info,” then verify the following settings:

Connect Using: GS Port 3

Device: RTK TEST (not as shown on graphic)

RTK Data Format: Leica 4G

Scroll down to see the checkbox for “Use External antenna on GS15.” Generally, you want to use the external antenna, so make sure to check this box.

[wptabtitle] Base Connection Settings: Data Rates Tab[/wptabtitle]

[wptabcontent]

On the Data Rates tab, the default settings are generally fine, but double check the RTK base ID. It should be set to 16, and if it is, it can be left that way. Tap OK in the bottom left corner. Tap OK if a warning about the antenna pops up. Now tap “Cntrl” at the bottom of the screen.

Ensure the following settings are present:

Radio Type: Pac Crest ADL

Channel: 1

Actual frequency: 461.0250 MHz

* The “Actual frequency” will be set at “0.0000MHz” until the GS15 and CS15 are connected.

Tap OK. Tap OK again to return to the main menu.

[wptabtitle] Power up the GS15[/wptabtitle]

[wptabcontent]

Power up the GS15 receiver that you will be using as a base station (the one that has the SD card in it) by pressing and holding the power button until the 3 LEDs below it light up. Keep the other GS15 that will act as the rover turned off.

Note: It is important to understand what the buttons, symbols, and LED lights do and/or represent on the GS15 unit. A detailed description of button operation can be found in the Leica “GS10/GS15 User Manual” in section 2.1, pages 20-24. A detailed description of symbols and LEDs can be found in the same manual in section 3.5, pages 64-68. The GS15 is powered down by pressing and holding the power button until the LEDs turn red.

[wptabtitle] Put Base into RTK Mode[/wptabtitle]

[wptabcontent]

Make sure the GS15 unit is in RTK base mode, and set up to broadcast RTK corrections via the radio. To do this, check that the LED beneath the RTK base symbol (arrow pointing up) is lit up green. If the GS15 is in Rover mode (arrow pointing down), you may need to quickly press the function button to change it to Base mode.

[wptabtitle] Connect CS15 to GS15 via Bluetooth[/wptabtitle]

[wptabcontent]

The CS15 should automatically connect to the GS15 unit once it has entered base mode, via Bluetooth. On the CS15, you will see the Bluetooth symbol appear at the top of the screen. On the GS15, the LED beneath the Bluetooth symbol will turn blue.

If the CS15 does not automatically connect with the GS15, you can search for all visible Bluetooth devices. Navigate in the Base menu: ‘Instrument’ → ‘Base connections’ → ‘connect to base’ and click on the “search” button on the bottom of the screen. The “Found Bluetooth devices” screen will appear. The GS15 will be listed under the ‘Name:’ column, named after its serial number. The serial number can be found on a white sticker on the lower side of the GS15, under the battery cover (e.g. “S/N: 1502919”)

You may want to power down the GS15 by pressing and holding the power button until the LEDs turn red if you are in lab configuring but if in field then you can configure the RTK while base is on, BUT – be sure you are connected to the ROVER not the BASE. If you can confirm this by making sure that the Bluetooth connection is the correct serial number.

[wptabtitle] Continue To…[/wptabtitle]

[wptabcontent]

Continue to Part 4 of the series, “Leica GS15 RTK: Configuring a GS15 Receiver as a Rover.”

[/wptabs]

Configuring the CS15 Field Controller

This page will show you how to set the parameters on a Leica CS15 field controller in preparation for GPS survey.

Hint: You can click on any image to see a larger version.

[wptabs mode=”vertical”]

[wptabtitle] Power up the CS15[/wptabtitle]

[wptabcontent]

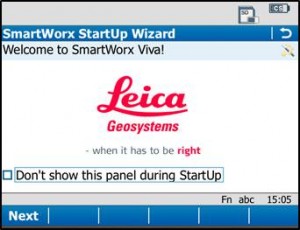

Power on the CS15 controller by pressing and holding the power button until the screen turns on. The operating system will take a minute to boot up, and will automatically start up the Leica Smartworks interface. Click “No” if the bluetooth connections Warning message pops up.

You may get the Welcome screen. If so, click “Next”.

[/wptabcontent]

[wptabtitle] Choose Survey Instrument[/wptabtitle]

[wptabcontent]

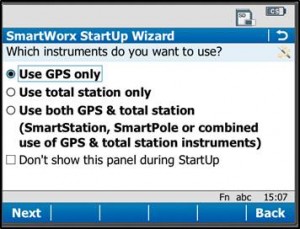

After powering on the controller, you may see the SmartWorx StartUp wizard. Confirm that “GPS Instrument” is selected in the drop-down in the “First Measure with:” menu, or the “use GPS only” radio box.

[wptabtitle]Choose a Job [/wptabtitle]

[wptabcontent]

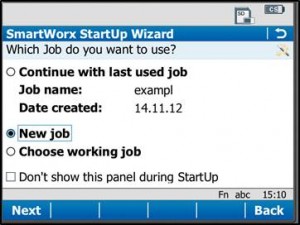

If these have been turned off by previous users, then the first screen that appears will allow you to “Continue with last used job,” create a “New Job,” or “Choose working Job.” For the purposes of this guide, select “New Job” and tap “Next” in the lower left corner.

Note that you can also use the “F” key below each entry. So pressing F1 which is below “Next” is the same as using the stylus and clicking on the touch screen. Pressing F6 is the same as pressing the stylus on Back.

[wptabtitle] Set Job Description[/wptabtitle]

[wptabcontent]

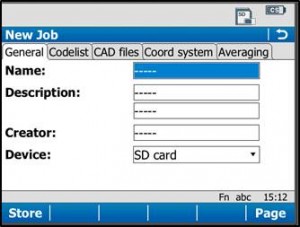

On the General Tab, enter a name for the job, a description, and the name of the person who is creating the job. Be sure to choose “SD Card” for the Device option, as this will result in all the data generated within the job to be saved only to the SD Card.

[wptabtitle]Set Coordinate System[/wptabtitle]

[wptabcontent]

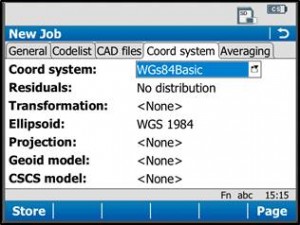

On the “Coord system” tab, be sure the coordinate system is set to “WGS84basic.” If it needs to be changed to this, just tap on the drop down menu, select “WGS84basic,” and tap OK in the lower left corner. You have the ability to create a custom coordinate system if needed (more information can be found in the Leica Technical Manual).

Tap “Store” in the lower left corner to save the job and go to the main menu.

Note: It is beneficial to learn what the icons along the top of the screen represent. For detailed information, see the Leica “Getting Started Guide” section 2.1.2, pages 51-54. Additionally, a brief and simple presentation of the main menu options can be found in the Leica “Getting Started Guide” section 2.1.3, pages 55-57.

[wptabtitle]Continue To…[/wptabtitle]

[wptabcontent]

Continue to Part 3 of the series, “Leica GS15 RTK: Configuring a GS15 Receiver as a Base.”

[/wptabs]

Leica GS15 RTK: Preliminary Setup before Going into the Field

This page will show you the basic instrument components needed for a Leica GS15 RTK GPS survey and will review memory card maintenance.

Hint: You can click on any image to see a larger version.

[wptabs mode=”vertical”]

[wptabtitle] Instrument Components[/wptabtitle]

[wptabcontent]

GS15 GNSS receiver (left) and CS15 field controller (right)

[/wptabcontent]

[wptabtitle] Equipment Check List [/wptabtitle]

[wptabcontent]

Minimum equipment and accessories needed (contents of instrument kit):

- • GS15 receiver (2)

- • CS15 field controller (1)

- • Antennas (2 – one for each GS15)

- • Holder for CS15 (1)

- • Base for telescopic rod (1)

- • Batteries

- 5 or more (two for each GS15 and one for the CS15, more depending on length of survey)

- Confirm that batteries are charged

- • SD cards

- Enough for each instrument (GS15 and CS15)

- Leica recommends 1GB

- Confirm that cards are not locked via the mechanical locks

- • Manuals

- Leica Viva GNSS Getting Started Guide

- Leica GS10/GS15 User Manual

-

Instruments and their locations in the kit.

[/wptabcontent]

[wptabtitle] Memory Card and Data Maintenance [/wptabtitle]

[wptabcontent]

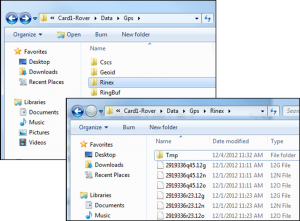

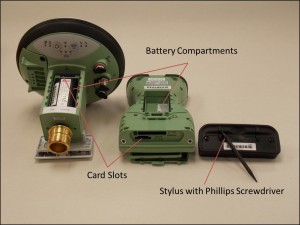

Make sure memory cards have adequate space for your data needs. The GS15 receivers hold one memory card, which can be accessed in the battery compartment beneath the power button. The CS15 controller also holds a memory card, which can be accessed by using the Phillips screwdriver end of the stylus to loosen the screws on top of the unit. These screws are spring loaded and only take about a half turn to loosen.

If you are recording Leica data (for example Leica MDX), the files will be found on the SD card in the DBX directory. If you are recording RINEX, the data will be found in DATA> GPS > RINEX.

- Note: The raw data files have the following format AAAA_BBBB_CCCCCC with the suffix .m00 for raw Leica data (the suffix .12o is for RINEX). The letters are AAAA = last four numbers of the unit’s serial number BBBB is the date in month and day so 0405 is the fourth month (April) and fifth day. CCCCCC is the hour (two digit 24 hour clock) minute and second. So 5:34 and 21 seconds PM is 173421. This is then followed by .m00 if it is RAW. Note that OPUS will now take Leica RAW as well as RINEX.

- *Only delete data from the SD card if it is your own work, or if the original owner has backed up the data. *

[/wptabcontent]

[wptabtitle] Inserting Memory Cards and Batteries [/wptabtitle]

If you plan to record to the controller, place one SD card into the CS15 and secure the top back in place by tightening the screws about a half turn until they lock with the Phillips screwdriver end of the stylus. You do not NEED to record to the controller but it may provide redundancy. Place the other SD card into one of the GS15 receivers. The GS15 that has the memory card will be used as the base station (you may set the rover to write to the controller).

Insert fully charged Lithium Ion batteries into all devices. The GS15 receivers can hold 2 batteries, which are stored below the antenna portion of the unit in the compartments on either side. The battery for the CS15 is located in a compartment on the back. To access this compartment, you will likely have to remove the mounting base first. To do this, slide the red bar to the right in order to unlock the mount. Gently wiggle the mount and pull away from the CS15 to detach it. The battery compartment is now accessible. Replace the mount by popping it back into place and slide the red bar all the way back to left, which locks the mount to the CS15 controller.

[wptabtitle] Continue To… [/wptabtitle]

[wptabcontent]

Continue to Part 2 of the Series, “Leica GS15 RTK: Configuring the CS15 Field Controller.”

[/wptabcontent]

[/wptabs]

Evaluating Objectives for Data

This document will introduce you to some of the initial questions that are posed when evaluating the overall objectives for your data as they relate to processing and future use.

Hint: You can click on any image to see a larger version.

[wptabs style=”wpui-alma” mode=”vertical”] [wptabtitle] INTENDED AUDIENCE OF THIS GUIDE [/wptabtitle]

[wptabcontent]

Who will find this guide useful?

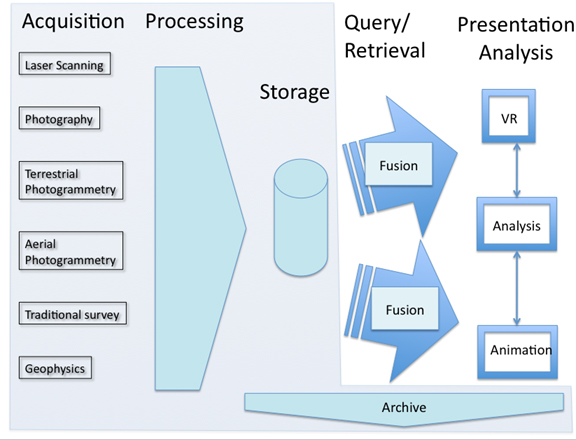

The growing number of digital technologies that allow for rapid and accurate documentation of sites and objects that are described on the GMV and within these guides, can acquire substantial amounts of data in a relatively short time in the field. In general, the resulting data are part of a larger data life cycle structure and are acquired with an appreciation of the wide range of possible future uses.

By the time that you are reading this, we assume that you are familiar with the types of data on the GMV and the types of applications and projects in which CAST uses them as outlined on the Using the GMV page and within individual technology sections. You should now also be familiar with the main ideas within the table Survey Options for GMV Technologies. In order to make use of this guide, you need to have a basic to intermediate understanding of these technologies and ideas.

______________________________________________________________

ADDITIONAL RESOURCES : In projects in which the quality and quantity of data to be collected is still being decided, this document is intended to be used in conjunction with the GMV’s Evaluating the Project Scope Document. Ideally, the ultimate destination of the data would be considered in the planning and collection stages. However, whether you making these decisions and collecting the data yourself or not, we hope that considering the objectives in this document will aid you in relating your data to the ‘big picture’.

The Archaeology Data Service / Digital Antiquity Guides to Good Practice provides much more detail about data management while this document attempts to simplify very complex topics for a more generalized understanding.

[/wptabcontent]

[wptabtitle] OBJECTIVE [/wptabtitle] [wptabcontent]

Goal of this Guide

It is critical to note that the topics discussed here focus ONLY on general, basic processing of data that is necessary to make it intelligible to others and ready for an archive. That said, the data acquisition, processing and archiving referenced here are intended to be part of a larger data life cycle structure with an understanding of possible future uses. It is, of course, impossible to anticipate all future uses to which data may be applied but the objectives considered here are designed to obtain well documented and comprehensive data that can be expected to support a broad range of future applications and analyses, within heritage applications and beyond.

The primary objective of this document is to aid users in evaluating overall goals for data and how they relate to maintaining archivable and reusable data by considering :

I. What is the overall life cycle of the data?

II. How is preparing data for archival quality related to the products produced for end ‘consumption’?

II. What types of error are involved with the data?

III. What are your overall goals for data processing? How does the data evolve over each stage of processing from the original data to final files or products that you are hoping to produce?

[/wptabcontent]

[wptabtitle] DATA LIFE CYCLE [/wptabtitle] [wptabcontent]

What is the Life Cycle of Your Data?

A Documentation Perspective : When properly considered, the life cycle of your data begins before any data is collected. The first concept of the data occurs early in project planning when decisions relating to the data, such as file types, naming conventions, and documentation methods, are made. Many projects and the great majority of heritage recordation efforts move directly from the acquisition and creation efforts to development and presentation of a specific set of work products. In general, here, we instead look at digital heritage and urban recordation data with a focus on a documentation perspective that keeps an ongoing life cycle in mind. This perspective involves multiple technologies in which the initial product is data in an archival quality that allows for a variety of end ‘consumption’ – including display, analysis and presentation products that are not covered here.

Heritage and Modern Environments : It should be noted that, in general, heritage guidelines for documenting these evolving technologies are far more advanced than the standards for projects involving modern environments. As disciplines that deal with modern environments (such as architects, engineers and city planners) become increasingly aware of these technologies and begin utilizing them for building information and daily maintenance/operations objectives, standards for these applications will continue to advance. While ultimate goals for heritage agendas often vary significantly from those goals involved in modern environment agendas, we propose that the basic documentation perspective applies to both. In all applications, if data is collected, processed and documented to meet basic archival quality, that data should be reusable and able to meet future needs.

[/wptabcontent]

[wptabtitle] ARCHIVING vs. CONSUMPTION [/wptabtitle] [wptabcontent]

How are archiving methods related to the end ‘consumption’ of data?

Future Needs : The methods of end ‘consumption’ and the final products to analyze or display your data will be specific to your project. Although final archiving might not be your first priority as you begin processing data, in all projects, we suggest that you maintain consistency and repeatability in processing and storage conventions to allow the data to meet these unknown, future needs. Maintaining consistency and keeping the data’s long-term life cycle in mind will help prepare your project for storing your data and/or meeting archival requirements as you realize these are needed.

Consistency at each stage of processing : All of the technologies on the GMV require basic data processing to make the data even minimally useful. Each technology section includes workflows for basic processing to achieve this minimal useability with some sections delving into more advanced processes. Combining the steps for basic processing with the detailed information on Project Documentation in the Guides to Good Practice will insure that your raw data will be adequate for archival purposes and will be accessible and usable by future investigators. Continuing these methodologies throughout the project will insure that the raw data, the minimally processed data, and comprehensive metadata are archived in such a manner as to remain accessible and to allow the future development of whichever end ‘consumption’ products are chosen.

[/wptabcontent]

[wptabtitle] TYPES OF ERROR [/wptabtitle] [wptabcontent]

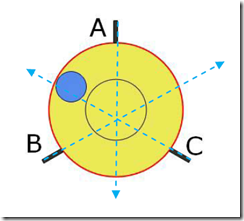

Are accuracy and precision equally important in your data?

This might seem like a strange question. It is tempting to think that you need both highly precise and highly accurate data. Ideally, the equipment being used and the people performing the survey would be perfectly accurate and precise throughout all processes. However, there is error involved in every process and understanding the sources and types of error involved will help you to determine what is acceptable for your purposes and how to track the errors.

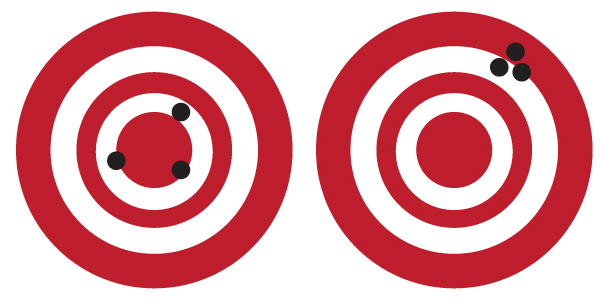

The left shows high accuracy but low precision while the right image shows high precision but low accuracy